Radiology AI/ML products have the potential to revolutionize the field, but bringing them to market is not without its challenges. There are software engineering challenges, integration challenges, and then there’s the FDA. On top of all of these, there’s the problem of product-market fit.

In this article, we share some hard-earned lessons about how to find product-market fit for Radiology AI/ML applications.

If you’re developing Software as a Medical Device (SaMD) that incorporates AI/ML and need help with regulatory clearance or software engineering, we can accelerate your time to market.

“It’s The Workflow, Stupid”

At a conference in mid-2023, a prominent radiologist lamented that most AI/ML applications in the radiology space are “useless” because they don’t integrate with radiologists’ workflow: “It’s the workflow, stupid.” If you’re building an application for radiologists, you must understand their workflow.

Radiologists are a pivotal part of the healthcare ecosystem, and each one reads many cases per day under constant pressure and growing caseloads. Many institutions measure radiologist productivity, in part, using Relative Value Units (RVUs), the measure of value used in the United States Medicare reimbursement formula for physician services. Here are the RVU values for a few types of reads:

| CPT Code | Description | Total RVU (2023) |
|---|---|---|
| 70100 | X-Ray Exam of Jaw (< 4 Views) | 1.12 |
| 70110 | X-Ray Exam of Jaw (≥ 4 Views) | 1.27 |
| 70450 | CT Head/Brain without Dye | 3.33 |
| 70460 | CT Head/Brain with Dye | 4.68 |
| 77067 | Screening Mammogram | 3.85 |
| 77065 | Diagnostic Mammogram | 3.76 |
| 77300 | Radiation Therapy Dose Plan | 1.93 |
| 77301 | Radiation Therapy Dose Plan IMRT | 55.46 |
| 78201 | Liver Imaging | 5.61 |

Because of both the volume of cases and the compensation model, every second and every extra click counts. When developing a new AI/ML application, you must consider the workflow very carefully. We suggest interviewing a few radiologists who are potential end users about their existing workflows early in your design process.

Radiologists, burdened with demanding schedules, are unlikely to use new web applications to read cases. They may consider using a specialized user interface for large numbers of similar reads if the application saves a lot of time. However, creating such an application requires developers to have an intimate understanding of user behavior, workflow, and specific needs.

Latency in moving imaging data is another critical workflow issue: if an AI result arrives after the radiologist has already opened or read the study, the application is effectively useless.

Appropriate use of DICOM, HL7, and FHIR is another factor. For example, many early AI applications simply generated PDF reports and embedded them in DICOM Secondary Capture objects. This approach may not offer the best workflow integration, because downstream systems see only pixels and cannot, for instance, pull measurements into the report automatically. More advanced DICOM options, such as Structured Reports using the Measurement Report template (TID 1500), could deliver a more seamless workflow experience. The key lies in balancing user convenience, algorithmic efficiency, and workflow integration, so that AI augments the critical work radiologists do rather than adding to their burden.
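To make the distinction concrete, here is a minimal sketch, using only the Python standard library, of how a downstream system (for example, a reporting tool) might decide whether an AI result object carries machine-readable measurements. The SOP Class UIDs are the standard DICOM values; the `AiResultObject` type and `describe_result` function are hypothetical. In practice, the two fields would be read from the DICOM header (`SOPClassUID` and the `TemplateIdentifier` in `ContentTemplateSequence`) with a library such as pydicom.

```python
from dataclasses import dataclass
from typing import Optional

# Standard DICOM Storage SOP Class UIDs (DICOM PS3.4).
SECONDARY_CAPTURE = "1.2.840.10008.5.1.4.1.1.7"
ENCAPSULATED_PDF = "1.2.840.10008.5.1.4.1.1.104.1"
ENHANCED_SR = "1.2.840.10008.5.1.4.1.1.88.22"
COMPREHENSIVE_SR = "1.2.840.10008.5.1.4.1.1.88.33"
COMPREHENSIVE_3D_SR = "1.2.840.10008.5.1.4.1.1.88.34"

SR_CLASSES = {ENHANCED_SR, COMPREHENSIVE_SR, COMPREHENSIVE_3D_SR}


@dataclass
class AiResultObject:
    """Hypothetical summary of the two header fields this check needs."""
    sop_class_uid: str                 # SOPClassUID (0008,0016)
    template_id: Optional[str] = None  # TemplateIdentifier from ContentTemplateSequence


def describe_result(obj: AiResultObject) -> str:
    """Classify an AI result object by how usable it is downstream."""
    if obj.sop_class_uid in (SECONDARY_CAPTURE, ENCAPSULATED_PDF):
        # Pixels or a PDF: a human can read it, but software cannot extract values.
        return "human-readable only"
    if obj.sop_class_uid in SR_CLASSES and obj.template_id == "1500":
        return "structured measurements (TID 1500)"
    if obj.sop_class_uid in SR_CLASSES:
        return "structured report (other template)"
    return "unknown"
```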

Workflow will be a major issue for most AI applications, even beyond radiology. I like to summarize this with a short equation:

AI APP VALUE = AI ACCURACY - WORKFLOW DISRUPTION

Thus, you can increase the value of an AI application either by improving its accuracy or by integrating it more seamlessly into the workflow. For example, I use Notion to write articles for our website. At the time of writing, Notion uses GPT-3.5, which isn't as capable as GPT-4 (available in ChatGPT). However, because Notion integrates the AI directly into my writing workflow, and GPT-3.5 is “good enough” most of the time, I end up using it more often than ChatGPT.

GitHub Copilot is another example of an application that adds value by integrating AI seamlessly into the software engineer’s workflow.

IT Nightmares

Installing new software services within radiology centers is often a massive undertaking that can take months or even years. The IT process can be arduous, even with strong support from clinical users, primarily because of the workload and priorities of these centers’ IT departments. Their primary focus is keeping existing clinical workflows running smoothly. New projects are often secondary, there tend to be many of them, and each involves many other busy stakeholders.

Cybersecurity has become an increasingly large concern. Beyond the cybersecurity requirements the FDA places on you, be prepared to answer each IT department’s questions. Addressing the items in the MDS2 (Manufacturer Disclosure Statement for Medical Device Security) is a good place to start. Note that you don’t need to check every box in this document, but each IT department will have its own non-negotiables.

While there's a consensus that cloud technology represents the future of data storage and processing, a majority of institutions continue to operate on-premise PACS, creating a challenging situation for manufacturers. To cater to a diverse client base, manufacturers may still need to offer and maintain both on-premise and cloud solutions.

In light of these challenges, manufacturers may consider deploying via an AI platform such as Nuance, Aidoc, Blackford, or deepcOS. These platforms handle IT installation, governance, and other concerns, and they support both on-premise and cloud deployments. Typically, the manufacturer provides a mechanism to 'dockerize' the AI model, which the platform then integrates with the necessary PACS, EHR, RIS, or reporting systems. However, each platform comes with its own SDK, and deploying via these platforms may restrict customization of the UI or workflow.
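Because each platform's SDK defines its own input/output contract, one common approach is to keep the model behind a thin, platform-agnostic entrypoint and write a small adapter per platform. The sketch below assumes a hypothetical file-based contract (DICOM files in an input directory, one result file per input in an output directory); it is not any vendor's actual SDK, and `run_inference` and `dummy_predict` are illustrative names. A real integration would typically emit DICOM SR or segmentation objects rather than JSON.

```python
import json
from pathlib import Path
from typing import Callable, Dict

# Hypothetical model interface: takes one input file path, returns findings.
Predictor = Callable[[Path], Dict[str, object]]


def run_inference(input_dir: Path, output_dir: Path, predict: Predictor) -> int:
    """Run `predict` on every .dcm file in input_dir and write one JSON result per file.

    Returns the number of files processed.
    """
    output_dir.mkdir(parents=True, exist_ok=True)
    count = 0
    for dicom_path in sorted(input_dir.glob("*.dcm")):
        result = predict(dicom_path)
        out_path = output_dir / f"{dicom_path.stem}.json"
        out_path.write_text(json.dumps(result))
        count += 1
    return count


def dummy_predict(path: Path) -> Dict[str, object]:
    # Placeholder standing in for the real model; always reports no finding.
    return {"file": path.name, "finding": "none", "score": 0.0}
```

Inside the container, the entrypoint would call `run_inference` with whatever directories (or queue, or API) the platform provides; moving to another platform then means rewriting only that thin adapter, not the model code.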

Finally, it's essential to acknowledge that AI model performance can shift over time, for example as scanners, protocols, or patient populations change. Sophisticated clients will therefore appreciate a built-in mechanism for continually monitoring model quality. Providing such a feature can build client trust and strengthen the manufacturer-client relationship over the long run.
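A minimal sketch of such monitoring, assuming a binary finding can be extracted from both the AI output and the radiologist's final report: track agreement over a rolling window of recent cases and flag the model for review when agreement drops below a threshold. The `AgreementMonitor` class and its default `window`, `threshold`, and `min_cases` values are hypothetical. Agreement with the final read is only one proxy; a production system would likely also track input characteristics (scanner, protocol) and per-subgroup performance.

```python
from collections import deque


class AgreementMonitor:
    """Rolling agreement between AI output and the radiologist's final read.

    Hypothetical sketch: window, threshold, and min_cases are illustrative,
    and "agreement" is a simple match of binary findings.
    """

    def __init__(self, window: int = 200, threshold: float = 0.85, min_cases: int = 50):
        self.results = deque(maxlen=window)  # True where AI and radiologist agreed
        self.threshold = threshold
        self.min_cases = min_cases

    def record(self, ai_positive: bool, radiologist_positive: bool) -> None:
        self.results.append(ai_positive == radiologist_positive)

    def agreement_rate(self) -> float:
        if not self.results:
            return float("nan")
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        # Only alert once enough cases have accumulated for the rate to be meaningful.
        if len(self.results) < self.min_cases:
            return False
        return self.agreement_rate() < self.threshold
```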

Don’t Build Something Nobody Wants

It is vital that you understand your value proposition and what your users want. This is Startups 101, yet many startups fail to grasp it. It's disheartening to pour resources into building a device and navigating the complicated FDA clearance process, only to discover that the product doesn't meet the market's needs. To avoid the heartbreak and financial fallout of building the wrong product, research the market thoroughly and talk to potential customers before starting development.

For example, consider relatively low-risk triage applications, which may reorder the radiologist’s worklist so that the most urgent reads come first. These applications can be beneficial when there's a substantial backlog, but if an organization already has sub-hour read times for most procedures, most clinical scenarios won’t benefit much from reducing read times further. One notable exception is stroke, where every minute truly counts.
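At its core, a triage application like this is a priority queue. The sketch below assumes the model produces a single binary urgency flag per study: flagged studies move ahead of routine ones, and arrival order is preserved within each tier. `WorklistItem` and `build_worklist` are hypothetical names, and in practice the reordering usually happens inside the PACS or RIS worklist rather than in a standalone queue.

```python
import heapq
from dataclasses import dataclass, field
from typing import Iterable, List, Tuple


@dataclass(order=True)
class WorklistItem:
    priority: int                          # lower value = read sooner
    arrival_seq: int                       # tie-breaker: first come, first served
    accession: str = field(compare=False)  # hypothetical study identifier


def build_worklist(studies: Iterable[Tuple[str, bool]]) -> List[str]:
    """studies: (accession, ai_flagged_urgent) pairs in arrival order."""
    heap: List[WorklistItem] = []
    for seq, (accession, urgent) in enumerate(studies):
        heapq.heappush(heap, WorklistItem(0 if urgent else 1, seq, accession))
    # Pop everything to get the order in which studies would be read.
    return [heapq.heappop(heap).accession for _ in range(len(heap))]
```

For example, `build_worklist([("A1", False), ("A2", True), ("A3", False), ("A4", True)])` returns `["A2", "A4", "A1", "A3"]`. A flagged study can only jump ahead of the studies already waiting, so when the backlog is short, the time it saves is correspondingly small.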

Similarly, the utility of AI tools depends on how end users perceive them. Radiologists, for instance, are unlikely to appreciate spending an additional 15 seconds reviewing an AI model's output when they could have interpreted the original X-ray in less time. Furthermore, narrowly focused AI tools are often less useful than comprehensive ones, because reviewing the outputs of numerous specialized tools becomes burdensome and counterproductive.
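The arithmetic behind this is worth making explicit, because small per-read costs add up over a day. The function below is a back-of-the-envelope sketch; `net_minutes_per_day` and every input to it are hypothetical and should come from your own time-and-motion observations of the target workflow.

```python
from typing import Sequence, Tuple


def net_minutes_per_day(reads_per_day: int,
                        tools: Sequence[Tuple[float, float]]) -> float:
    """Net minutes gained (positive) or lost (negative) per radiologist per day.

    tools: one (seconds_saved_per_read, seconds_to_review_output) pair per AI tool.
    """
    net_seconds_per_read = sum(saved - review for saved, review in tools)
    return reads_per_day * net_seconds_per_read / 60
```

With hypothetical numbers, three narrow tools that each save 5 seconds per read but each take 15 seconds to review give `net_minutes_per_day(100, [(5, 15)] * 3)`, which returns `-50.0`: a radiologist reading 100 cases a day loses 50 minutes.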

Contrary to what one might expect, it's often the more mundane applications, such as generating reports or streamlining sluggish workflows, that make the most significant impact. These seemingly 'boring' solutions can enhance productivity, save time, and ultimately result in more efficient service delivery.

Finally, traditional metrics such as PPV and sensitivity can be misleading. Although it's easy to focus on these metrics (they're often emphasized in the literature), the real value may lie elsewhere. For example, a 90% overall sensitivity might sound impressive, but a model that can reliably identify the 40% of cases where it is almost certainly correct could be far more clinically useful, because radiologists then know which outputs they can trust. A model's ability to accurately predict its own performance across situations, rather than a high but uncertain aggregate score, is where its true utility lies.
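One way to quantify this is selective prediction: the model offers an opinion only when its score falls in a confident region and defers the rest to the radiologist, and you report coverage (the fraction of cases it answers) alongside accuracy on those cases. A minimal sketch, assuming the model outputs a probability-like score; the `low` and `high` thresholds are hypothetical and would be chosen on a validation set, not on the test set.

```python
from typing import List, Tuple


def sensitivity(preds: List[bool], labels: List[bool]) -> float:
    """Fraction of truly positive cases the model flagged as positive."""
    true_pos = sum(p and y for p, y in zip(preds, labels))
    positives = sum(labels)
    return true_pos / positives if positives else float("nan")


def coverage_and_accuracy(scores: List[float], labels: List[bool],
                          low: float, high: float) -> Tuple[float, float]:
    """Coverage and accuracy on the cases where the model is confident.

    A case is "confident" if its score is <= low (predict negative) or
    >= high (predict positive); everything in between is deferred to the
    radiologist with no AI opinion. Assumes low < high.
    """
    confident = [(s >= high, y) for s, y in zip(scores, labels)
                 if s <= low or s >= high]
    if not confident:
        return 0.0, float("nan")
    coverage = len(confident) / len(scores)
    accuracy = sum(pred == y for pred, y in confident) / len(confident)
    return coverage, accuracy
```

Reporting the coverage and accuracy pair alongside sensitivity tells radiologists how often they can rely on the model outright, which a single aggregate figure cannot.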

Conclusion

I hope these hard-earned lessons are useful if you’re building an AI-driven medical device or SaMD. If you have any questions, we’d be happy to talk.

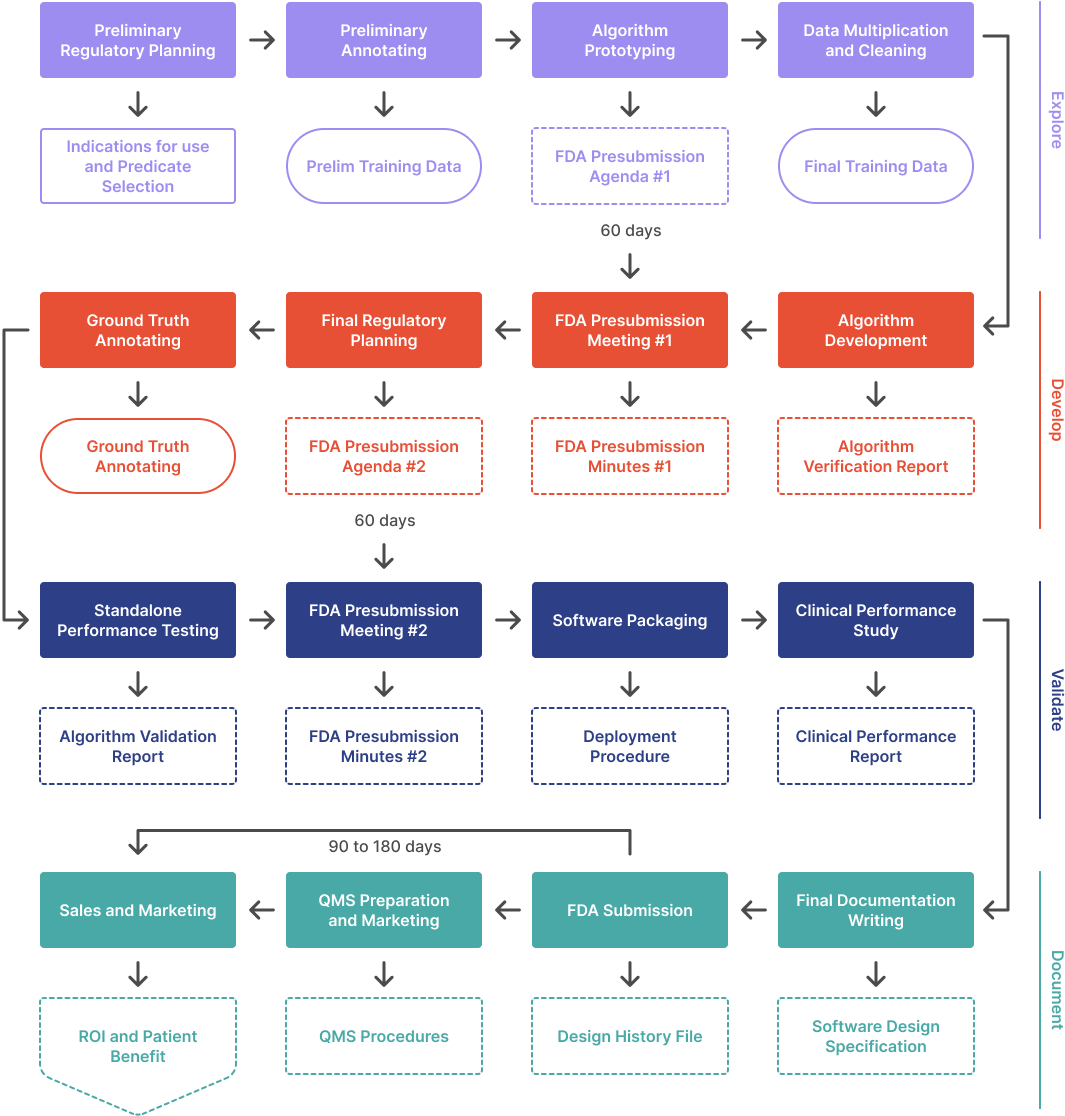

In this article, I’ve focused on product-market fit, but there are many other software engineering and regulatory lessons we can share. Some of these are addressed in Yujan’s fantastic article, AI/ML from Idea to FDA.

Parts of this article were drafted with Notion’s AI tools, but all of it was reviewed by the author.