Innolitics introduction

Innolitics provides US FDA regulatory consulting to startups and established medical-device companies. We’re experts with medical-device software, cybersecurity, and AI/ML. See our services and solutions pages for more details.

We have practicing software engineers on our team, so unlike many regulatory firms, we speak both “software” and “regulatory”. We can guide your team through the process of writing software validation and cybersecurity documentation and we can even accelerate the process and write much of the documentation for you (see our Fast 510(k) Solution).

About this Transcript

This document is a transcript of an official FDA (or IMDRF) guidance document. We transcribe the official PDFs into HTML so that we can share links to particular sections of the guidance when communicating internally and with our clients. We do our best to be accurate and have a thorough review process, but occasionally mistakes slip through. If you notice a typo, please email a screenshot of it to Mihajlo at mgrcic@innolitics.com so we can fix it.

Preamble

Document issued on January 7, 2025.

For questions about this document regarding CDRH-regulated devices, contact the Digital Health Center of Excellence at digitalhealth@fda.hhs.gov. For questions about this document regarding CBER-regulated devices, contact the Office of Communication, Outreach, and Development (OCOD) at 1-800-835-4709 or 240-402-8010, or by email at ocod@fda.hhs.gov. For questions about this document regarding CDER-regulated products, contact druginfo@fda.hhs.gov. For questions about this document regarding combination products, contact the Office of Combination Products at combination@fda.gov.

Contains non-binding guidance.

I. Introduction

FDA has long promoted a total product life cycle (TPLC) approach to the oversight of medical devices, including artificial intelligence (AI)-enabled devices, and has committed to developing guidances and resources for such an approach. Some recent efforts include developing guiding principles for good machine learning practice (GMLP)1 and transparency for machine learning-enabled devices2 to help promote safe, effective, and high-quality machine learning models; and a public workshop on fostering a patient-centered approach to AI-enabled devices, including discussions of device transparency for users.3 This guidance intends to continue these efforts by providing lifecycle management and marketing submission recommendations consistent with a TPLC approach for AI-enabled devices.

This guidance provides recommendations on the contents of marketing submissions for devices that include AI-enabled device software functions including documentation and information that will support FDA’s review. To support the development of appropriate documentation for FDA’s assessment of devices, this guidance also provides recommendations for the design and development of AI-enabled devices that manufacturers may consider using throughout the TPLC. The recommendations reflect a comprehensive approach to lifecycle management of AI-enabled devices throughout the TPLC. Furthermore, the guidance includes FDA’s current thinking on strategies to address transparency and bias throughout the TPLC of AI-enabled devices, including by collecting evidence to evaluate whether a device benefits all relevant demographic groups (e.g., race, ethnicity, sex, and age) similarly, to help ensure that these devices remain safe and effective for their intended use.

The emergence of consensus standards related to software has helped to improve the consistency and quality of software development and documentation, particularly with respect to activities such as risk assessment and management. When possible, FDA harmonized the terminology and recommendations in this guidance with software-related consensus standards. The Agency encourages the consideration of such FDA-recognized consensus standards when developing AI-enabled devices and preparing premarket documentation. For the current edition of the FDA-recognized consensus standards referenced in this document, see the FDA Recognized Consensus Standards Database. If submitting a Declaration of Conformity to a recognized standard, we recommend including the appropriate supporting documentation. For more information regarding use of consensus standards in regulatory submissions, refer to the FDA guidances titled “Appropriate Use of Voluntary Consensus Standards in Premarket Submissions for Medical Devices” and “Standards Development and the Use of Standards in Regulatory Submissions Reviewed in the Center for Biologics Evaluation and Research.”

In general, FDA’s guidance documents do not establish legally enforceable responsibilities. Instead, guidances describe the Agency’s current thinking on a topic and should be viewed only as recommendations, unless specific regulatory or statutory requirements are cited. The use of the word “should” in Agency guidances means that something is suggested or recommended, but not required.

II. Scope

For purposes of this guidance, FDA refers to a software function that meets the definition of a device as a “device software function.” A “device software function” is a software function that meets the device definition in section 201(h) of the Federal Food, Drug, and Cosmetic Act (FD&C Act).4 As discussed in other FDA guidance, the term “function” refers to a distinct purpose of the product, which could be the intended use or a subset of the intended use of the product.5

AI-enabled devices are devices that include one or more AI-enabled device software functions (AI-DSFs). An AI-DSF is a device software function that implements one or more “AI models” (referred to as “models” in this guidance) to achieve its intended purpose. A model is a mathematical construct that generates an inference or prediction based on new input data. In this guidance, when “AI-enabled device” is used, it refers to the whole device, whereas when “AI-DSF” is used, it refers only to the function that uses AI. In this guidance, when “model” is used, it refers only to the mathematical construct.

To continue to support the development of AI-enabled devices, this guidance provides recommendations on the documentation and information that should be included in marketing submissions to support FDA’s review of devices that include AI-DSFs. For purposes of this guidance, the term “marketing submission” refers to premarket notification (510(k)) submission, De Novo classification request, Premarket Approval (PMA) application, Humanitarian Device Exemption (HDE), or Biologics License Application (BLA).6 Some of the proposed recommendations in this guidance also may apply to Investigational Device Exemption (IDE) submissions. For AI-enabled devices subject to 510(k) requirements, an AI-enabled device can be found substantially equivalent to a non-AI-enabled device with the same intended use provided, among other things, the AI-enabled device does not introduce different questions of safety and effectiveness compared to the non-AI-enabled device and meets other requirements for a determination of substantial equivalence in accordance with section 513(i) of the FD&C Act.

Generally, the recommendations in this guidance also apply to the device constituent part7 of a combination product8 when the device constituent part includes an AI-DSF. In developing an AI-DSF, sponsors should consider the impact of the AI-DSF in the context of the combination product as a whole. For a combination product that includes an AI-DSF, we highly encourage early engagement with the FDA lead review division for the combination product.9 In accordance with the Inter-Center consult process, the FDA lead review division will consult the appropriate subject matter experts.10 FDA recommends that sponsors refer to other guidances for recommendations on other aspects of investigational considerations and marketing submissions for combination products.11

The recommendations proposed within this guidance are based on FDA’s experience with reviewing a variety of AI-enabled devices, as well as current regulatory science research.

While the proposed recommendations are intended to be broadly applicable to AI-enabled devices, many of these recommendations may be specifically relevant to devices that incorporate the subset of AI known as machine learning, particularly deep learning and neural networks. Additional considerations may apply for other forms of AI.

In some cases, this guidance highlights recommendations from other guidances in order to assist manufacturers with applying those recommendations to AI-enabled devices. The inclusion of certain recommendations in this guidance does not negate applicable recommendations in other guidances that may not be included. This guidance should be considered in the context of the FD&C Act, its implementing regulations, and other guidance documents.

This guidance is not intended to provide a complete description of what may be necessary to include in a marketing submission for an AI-enabled device. In particular, this guidance references sections of the FDA guidance titled “Content of Premarket Submissions for Device Software Functions” (hereafter referred to as “Premarket Software Guidance”), which includes significant additional considerations for AI-enabled devices, but does not include references to every section of that guidance. Additionally, this guidance does not address all of the data and information to be submitted in support of a specific indication for an AI-enabled device. FDA recommends that sponsors also refer to other guidances, as applicable to a particular device, for recommendations on other aspects of a marketing submission. Examples of relevant guidances for specific technologies include the FDA guidances titled “Technical Performance Assessment of Quantitative Imaging in Radiological Device Premarket Submissions” and “Technical Considerations for Medical Devices with Physiologic Closed-Loop Control Technology.” FDA further encourages sponsors to consider other available resources including consensus standards and publicly available information when preparing their marketing submissions. As with all devices, FDA intends to take a risk-based approach to determining specific testing and applicable recommendations to support marketing submissions for AI-enabled devices.

Early engagement with FDA can help guide product development and submission preparation. In particular, early engagement could be helpful when new and emerging technology is used in the development or design of the device, or when novel methods are used during the validation of the device. FDA encourages sponsors to consider discussing these plans with FDA via the Q-Submission Program.12

III. TPLC Approach: General Principles

This guidance acknowledges the importance of a TPLC approach to the management of AI-enabled devices. In addition to recommendations regarding the documentation and information that should be included in marketing submissions, which reflect a comprehensive approach to the management of risk throughout the TPLC, the resources provided in this guidance are also intended to assist with the device development and lifecycle management of AI-enabled devices, which should help support the safety and effectiveness of these devices. This guidance provides both specific recommendations on the information and documentation to support a marketing submission for an AI-enabled device and recommendations for the design, development, deployment, and maintenance of AI-enabled devices, including performance management.13

This guidance also includes FDA’s current thinking on strategies to address transparency and bias throughout the TPLC of AI-enabled devices. These interconnected considerations are important throughout the TPLC and should be incorporated from the earliest stage of device design through decommissioning to help design transparency and the control of bias into the device and ensure its safety and effectiveness. Transparency involves ensuring that important information is both accessible and functionally comprehensible, and it is connected both to the sharing of information and to the usability of a device. AI bias is a potential tendency to produce incorrect results in a systematic, but sometimes unforeseeable, way, which can impact the safety and effectiveness of the device within all or a subset of the intended use population (e.g., different healthcare settings, different input devices, sex, age). A comprehensive approach to transparency and bias is particularly important for AI-enabled devices, which can be hard for users to understand due to the opacity of many models and model reliance on data correlations that may not map directly to biologically plausible mechanisms of action. Recommendations for a design approach to transparency are provided in Appendix B (Transparency Design Considerations). With regard to the control of bias for AI-enabled devices, relevant activities can include addressing representativeness in data collection for development, testing, and monitoring throughout the product lifecycle, as well as evaluating performance across subgroups of the intended use population.
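
Evaluating performance across subgroups, one of the bias-control activities named above, can be made concrete with a small calculation. The Python below is a minimal sketch, not an FDA-specified method: it assumes a labeled test set in which each case carries a subgroup label, a reference-standard label, and a binary device output, and it reports sensitivity and specificity for each subgroup. The `Case` record, its field names, and the choice of metrics are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Case:
    subgroup: str   # hypothetical subgroup label, e.g. "female" or "site_B"
    truth: int      # reference-standard label: 1 = condition present, 0 = absent
    predicted: int  # device output after thresholding: 1 = positive, 0 = negative


def subgroup_performance(cases):
    """Return case count, sensitivity, and specificity for each subgroup.

    A metric is None when the subgroup has no cases of that class, rather
    than silently reporting 0 or 1.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for c in cases:
        cell = counts[c.subgroup]
        if c.truth == 1:
            cell["tp" if c.predicted == 1 else "fn"] += 1
        else:
            cell["tn" if c.predicted == 0 else "fp"] += 1

    results = {}
    for group, k in counts.items():
        positives = k["tp"] + k["fn"]
        negatives = k["tn"] + k["fp"]
        results[group] = {
            "n": positives + negatives,
            "sensitivity": k["tp"] / positives if positives else None,
            "specificity": k["tn"] / negatives if negatives else None,
        }
    return results


if __name__ == "__main__":
    # Hypothetical cases for two subgroups, "A" and "B".
    demo = [
        Case("A", 1, 1), Case("A", 1, 0), Case("A", 0, 0), Case("A", 0, 0),
        Case("B", 1, 1), Case("B", 1, 1), Case("B", 0, 1), Case("B", 0, 0),
    ]
    for group, metrics in sorted(subgroup_performance(demo).items()):
        print(group, metrics)
```

Reporting the per-subgroup case count next to each metric matters because estimates from small subgroups can be unstable; a fuller analysis would typically add confidence intervals.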

Finally, this guidance includes recommendations that address the performance of AI-enabled devices throughout the TPLC, including in the postmarket setting. For example, AI-enabled devices can be sensitive to differences in input data (also referred to as data drift), such as differences between the input data used during development and the input data encountered in actual deployments. Data drift occurs when systems that produce inputs for AI-enabled devices change over time in ways that may impact the performance of the device but may not be evident to users; in addition to data drift, AI-enabled devices can also be susceptible to changes in performance due to other factors. Sponsors are also encouraged to consider the use of a predetermined change control plan (PCCP), as discussed in the FDA guidance titled “Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence-Enabled Device Software Functions,” which describes an approach for manufacturers to prospectively specify and seek premarket authorization for intended modifications to an AI-DSF (e.g., to improve device performance) without needing to submit additional marketing submissions or obtain further FDA authorization before implementing such modifications consistent with the PCCP.
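
As one illustration of monitoring for data drift, the sketch below compares the distribution of a single numeric input between development data and deployment data using the population stability index (PSI). PSI is a commonly used drift statistic chosen here only for simplicity; this guidance does not prescribe it or any other specific method. The function name, the bin edges, and the `eps` floor for empty bins are assumptions.

```python
import math
from bisect import bisect_right


def population_stability_index(reference, current, edges, eps=1e-6):
    """PSI between a reference (development) sample and a current sample.

    `edges` are hypothetical bin boundaries, assumed sorted ascending; values
    below the first edge or at/above the last edge fall into the end bins.
    A value of 0 means identical binned distributions; larger values
    indicate a larger shift.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # bisect_right returns the number of edges <= v, i.e. the bin index
            counts[bisect_right(edges, v)] += 1
        # floor empty bins at eps so the logarithm below stays finite
        return [max(c / len(values), eps) for c in counts]

    p_ref = proportions(reference)
    p_cur = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(p_ref, p_cur))


if __name__ == "__main__":
    development = [1, 2, 3, 4, 5, 6, 7, 8]         # hypothetical input values
    deployment = [6, 7, 8, 9, 10, 11, 12, 13]      # hypothetical shifted inputs
    print(population_stability_index(development, development, edges=[3, 6]))
    print(population_stability_index(development, deployment, edges=[3, 6]))
```

Any alert threshold on such a statistic, and the choice of which inputs to monitor, would need to be justified for the specific device; a shift in an input distribution does not by itself show that device performance has changed.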

IV. How to Use this Guidance: Overview of AI-Enabled Device Marketing Submission Content Recommendations

This guidance provides recommendations on the documentation and information that should be included in marketing submissions to support FDA’s review of devices that include AI-DSFs.

There are some differences between the way FDA and the AI community consider the AI-enabled device TPLC and certain terminology. Therefore, this guidance clarifies these differences to facilitate better understanding of the recommendations in this guidance. For example, the AI community often uses the term “validation” to refer to data curation or model tuning that can be combined with the model training phase to optimize the model selection.14 However, validation is defined in 21 CFR 820.3(z)15 as “…confirmation by examination and provision of objective evidence that the particular requirements for a specific intended use can be consistently fulfilled.” This guidance uses the definition in 21 CFR 820.3(z), specifically when addressing the evaluation of performance of the model for its intended use. For clarity, using the term “validation” to refer to the training and tuning process should be avoided in the context of medical device marketing submissions. Also, the term “development” is used throughout this guidance to refer to training, tuning, and tuning evaluation (often referred to as “internal testing” in the AI community). In this guidance, “test data” is used to refer to data that may be used for verification and validation activities, also known as the testing process, and is not used to describe part of the development process. The “FDA Digital Health and Artificial Intelligence Glossary – Educational Resource” provides a compilation of commonly used terms in the artificial intelligence and machine learning space and their definitions.
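
The distinction drawn above between development data (training and tuning) and test data can be illustrated in code. The Python below is a minimal sketch under stated assumptions, not a method described in this guidance: each record is assumed to carry a patient identifier, records are assigned to partitions by hashing that identifier so that all of a patient's records land in the same partition, and the 20% test and 10% tuning fractions are arbitrary illustrative values.

```python
import hashlib


def assign_partition(patient_id, test_fraction=0.2, tuning_fraction=0.1):
    """Deterministically map a patient identifier to one data partition.

    Hashing the identifier, rather than shuffling individual records, keeps
    every record from the same patient in the same partition, so no patient
    contributes to both development (training/tuning) and test data.
    """
    digest = hashlib.sha256(patient_id.encode("utf-8")).hexdigest()
    u = int(digest[:8], 16) / 0xFFFFFFFF  # pseudo-uniform value in [0, 1]
    if u < test_fraction:
        return "test"                  # held out for verification and validation
    if u < test_fraction + tuning_fraction:
        return "development/tuning"    # used to tune and select the model
    return "development/training"      # used to fit model parameters


def split_records(records):
    """Group (patient_id, record) pairs into partitions keyed by name."""
    partitions = {}
    for patient_id, record in records:
        partitions.setdefault(assign_partition(patient_id), []).append(record)
    return partitions


if __name__ == "__main__":
    # Hypothetical records: 50 patients, 4 records each.
    records = [(f"P{i % 50}", f"record-{i}") for i in range(200)]
    for name, recs in sorted(split_records(records).items()):
        print(name, len(recs))
```

Splitting at the patient level rather than the record level is a common way to keep correlated records (for example, several images from one patient) from appearing in both development and test data.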

Sections V through XIII of this guidance describe the marketing submission content recommendations for AI-enabled devices. Specifically, in each section, under “Why should it be included in a submission for an AI-enabled device,” an explanation is provided for why certain information should be included in a marketing submission. An explanation of what documentation and information should be included in a marketing submission can be found under “What sponsors should include in a submission.” Finally, recommendations regarding where sponsors should include the information within each section of a marketing submission can be found under “Where sponsors should provide it in a submission.” Information regarding recommendations for lifecycle considerations, as well as examples of marketing submission materials, is provided in the appendices of this guidance.

The recommendations related to marketing submissions are organized according to how they should appear in the submission (see Appendix A (Table of Recommended Documentation)), which does not always align directly with the order of activities in the TPLC. While all referenced submission sections are provided to FDA during premarket review, they include information about what has already been done to develop and validate the device, as well as what a sponsor plans to do in the future to ensure a device’s ongoing safety and effectiveness. Some sections of the guidance also describe information relevant to multiple steps in the TPLC. One example of how the sections in this guidance may align with the TPLC is included below:

- Development – Risk Assessment, Data Management, and Model Description and Development

- Validation – Data Management and Validation

- Description of the Final Device – Device Description, Model Description and Development, User Interface and Labeling, Public Submission Summary

- Postmarket Management – Device Performance Monitoring and Cybersecurity

This guidance generally describes information that would be generated and documented during software development, verification, and validation. However, the information necessary to support market authorization will vary based on the specifics of each AI-enabled device, and during premarket review FDA may request additional information that is needed to evaluate the submission.

A. Quality System Documentation

When considering the recommendations in Sections V through XIII of this guidance, it may be helpful to consider if the documentation and information that should be included in a marketing submission, under “What sponsors should include in a submission,” could exist in the Quality System documentation. One source of documentation that may be used as part of demonstrating substantial equivalence or reasonable assurance of safety and effectiveness in the marketing submission for certain AI-enabled devices is documentation related to the ongoing requirements of the Quality System (QS) Regulation.16 This guidance explains how some documentation that may be relevant for QS regulation compliance for medical devices generally can also be provided premarket to demonstrate how a sponsor or manufacturer is addressing risks associated with AI-enabled devices specifically.

For example, the QS Regulation requires that manufacturers establish design controls for certain finished devices (see 21 CFR 820.30). Specifically, as part of design controls, a manufacturer must “establish and maintain procedures for validating the device design,” which “shall ensure that devices conform to defined user needs and intended uses and shall include testing of production units under actual or simulated use conditions” (21 CFR 820.30(g)). In addition, under 21 CFR 820.30(i), a manufacturer must establish and maintain procedures to identify, document, validate or, where appropriate, verify, review, and approve design changes before their implementation (“design changes”) for all devices, including those automated with software. Similarly, as part of the control of nonconforming product, manufacturers must establish and maintain procedures to “control product that does not conform to specified requirements,” including, under some circumstances, user requirements, and to implement corrective and preventive action, including by analyzing “complaints” and “other sources of quality data” to identify “existing and potential causes of nonconforming product” (21 CFR 820.90(a) and 820.100(a)(1)). Further, manufacturers have ongoing responsibility to manage the quality system and maintain device quality,17 including by reviewing the “suitability and effectiveness of the quality system at defined intervals and with sufficient frequency according to established procedures” to ensure the quality objectives are being met.18

V. Device Description

Why should it be included in a submission for an AI-enabled device: The following section describes information that sponsors should provide in the device description section of their marketing submission to help FDA understand the general characteristics of the AI-enabled device. The following recommendations supplement device-specific recommendations and recommendations provided in the Premarket Software Guidance, where applicable.

The device description supports FDA’s understanding of the intended use, expected operational sequence of the device (e.g., clinical workflow of the device), use environment, features of the model, and design of the AI-enabled device. This information is needed for FDA to evaluate the safety and effectiveness of the device. The device description provides important context about what the device does, including how it works, how a user may interact with it, and under what circumstances a device is likely to be used as intended.

For recommendations related to how to include information in the marketing submission about the technical characteristics of the model, and the method by which the model was developed, see Section IX (Model Description and Development) of this guidance.

What sponsors should include in a submission: In general, sponsors should include the following types of information as part of a device description for an AI-enabled device:

- A statement that AI is used in the device.

- A description of the device inputs and device outputs, including whether the inputs are entered manually or automatically, and a list of compatible input devices and acquisition protocols, as applicable.

- An explanation of how AI is used to achieve the device’s intended use. For devices with multiple functions, this explanation may include how AI-DSFs interact with each other as well as how they interact with non-AI-DSFs.

- A description of the intended users, their characteristics, and the level and type of training they are expected to have and/or receive. Users include those who will interpret the output. When relevant, list the qualifications or clinical role of the users intended to interpret the output. Users also include all people who interact with the device including during installation, use, and maintenance. For example, users may include technicians, health care providers, patients, and caregivers, as well as administrators and others involved in decisions about how to deploy medical devices, and how the device fits into clinical care.

- A description of the intended use environment(s) (e.g., clinical setting, home setting).

- A description of the intended workflow for the use of the device (e.g., intended decision-making role), including:

  - A description of the degree of automation that the device provides in comparison to the workflow for the current standard of care;

  - A description of the clinical circumstances that may lead to use; and

  - An explanation of how the outputs will be used in the clinical workflow.

- A description of installation and maintenance procedures.

- A description of any calibration and/or configuration procedures that must be regularly performed by users in order to maintain performance, including when calibration must be performed and how users can identify if calibration is needed again or is incorrect, as applicable.

Additionally, sponsors should include the following types of information as part of a device description for an AI-enabled device that has elements that can be configured by a user:

- A description of all configurable elements of the AI-enabled device, for example:

  - Visualizations that the user can turn on/off (e.g., overlays, quality indicators, or heatmaps);

  - Software inputs;

  - Model parameters when they are configured during use; and/or

  - Alert thresholds.

- A description of how these elements and their settings can be configured, including:

  - A description of the users who make configuration decisions (e.g., clinical user, administrative user, patient) including any necessary qualifications and training needed to make these decisions, as applicable;

  - An explanation of how users know which selections have been made;

  - A description of the level at which the configuration is defined, for example at the patient-, clinical site- or hospital network-level; and

  - A description of customizable pre-defined operating points, their outputs and performance ranges, as applicable. It is also important to specify how the operating points or operating point range(s) were selected based on the indications for use of the device.

- A description of the potential impact of the configurable elements on user decision making.
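
To illustrate how a pre-defined operating point might be derived from model output, the sketch below picks the highest score threshold that reaches a target sensitivity and then reports sensitivity and specificity at that threshold. It assumes a continuous model score, binary reference labels, and a sensitivity target chosen by the sponsor; the function names and example numbers are hypothetical. Consistent with the terminology in Section IV, such a selection would be made on development (tuning) data rather than on test data.

```python
def threshold_for_target_sensitivity(scores, labels, target_sensitivity):
    """Return the highest threshold t whose sensitivity is >= the target.

    A case is called positive when score >= t. As t falls, sensitivity
    cannot decrease and specificity cannot increase, so the highest
    qualifying threshold gives the best specificity among thresholds
    that meet the target.
    """
    positives = sum(labels)
    if positives == 0:
        raise ValueError("at least one positive case is required")
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        if tp / positives >= target_sensitivity:
            return t
    return None  # only reached if target_sensitivity > 1


def operating_point(scores, labels, threshold):
    """Sensitivity and specificity when score >= threshold is called positive."""
    tp = fn = tn = fp = 0
    for s, y in zip(scores, labels):
        called_positive = s >= threshold
        if y == 1 and called_positive:
            tp += 1
        elif y == 1:
            fn += 1
        elif called_positive:
            fp += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if tp + fn else None
    specificity = tn / (tn + fp) if tn + fp else None
    return sensitivity, specificity


if __name__ == "__main__":
    # Hypothetical tuning-set scores and reference labels.
    scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
    labels = [1, 1, 0, 1, 0, 1, 0, 0]
    t = threshold_for_target_sensitivity(scores, labels, 0.75)
    print(t, operating_point(scores, labels, t))  # prints: 0.6 (0.75, 0.75)
```

Reporting both metrics at each customizable operating point, rather than the threshold alone, gives users and reviewers the performance range that each setting implies.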

Finally, if a device contains multiple connected applications with separate interfaces, the device description should address all applications in the device. For example, if there is an application for patients, an application for caregivers, and a data portal for healthcare providers, the device description should include details on all functions across the applications and address how they are connected. Sponsors may also wish to consider enhancing the device description with the use of graphics, diagrams, illustrations, screen captured images, or video demonstrations, including screen captured video. For more information on how to share elements of the user interface in the marketing submission, see Section VI.A (User Interface).

Where sponsors should provide it in a submission: The AI-enabled device description information should be included in the “Device Description” section of the marketing submission.

VI. User Interface and Labeling

The user interface includes all points of interaction between the user and the device, including all elements of the device with which the user interacts (e.g., those parts of the device that users see, hear, touch). It also includes all sources of information transmitted by the device (including packaging and labeling19), training, and all physical controls and display elements (including alarms and the logic of operation of each device component and of the user interface system as a whole), as applicable. A user interface might be used throughout many phases of installation and use, such as while the user sets up the device (e.g., unpacking, set up, calibration), uses the device, or performs maintenance on the device (e.g., cleaning, replacing a battery, repairing parts).20 One way to help support the safety and effectiveness of the device for users is to design the user interface such that important information is provided throughout the course of use, to ensure that the device conforms to defined user needs.21 An approach that integrates important information throughout the user interface may help ensure that device users have access to information at the right time and in the right location to support safe and effective use, consistent with the intended use of the device. For software or mobile applications, manufacturers may leverage the user interface elements, such as information on the screen or alerts sent to other products, in addition to device labeling, to communicate risks about the device so that the necessary information is provided at the right time.

It is important to provide a holistic understanding of the user interface in a marketing submission to support the agency’s understanding of how the device works. If a sponsor references the user interface design in their risk analysis or another section of the submission to control risks, inclusion of the user interface may also support explanations of those risk controls. However, the actual analysis of the efficacy of risk control should be located separately from the description of the user interface. Further information on this topic is described in Section VII (Risk Assessment) and Appendix D (Usability Evaluation Considerations).

With regard to labeling specifically, a device user interface includes, but is not limited to, labeling. Further, within the user interface, labeling is subject to specific regulations. For example, depending on whether the device is for prescription-use or not, manufacturers are required to provide labeling containing adequate directions for use that would ensure that a layman or, for prescription devices, a practitioner licensed by law to administer the device, “can use a device safely and for the purposes for which it is intended.”22 One way to satisfy these requirements for AI-enabled devices could be to provide, in the labeling, clear information about the model, its performance characteristics, and how the model is integrated into the device. For example, users may need to know specific information about the model, such as the nature of the data on which the model was trained. These technical characteristics can be critical to the safe and effective use of the device because they can support a user’s understanding of how the device should be expected to perform, and what factors may impact performance.

The following sections further detail recommended information on the user interface (Section VI.A), and the labeling (Section VI.B), that should be provided in a marketing submission to support FDA’s understanding of what is communicated to users and the elements of the device with which the users interact.

Appendix B (Transparency Design Considerations) of this guidance outlines a recommended approach to transparency, including examples of types of information, modes of communication, and communication styles that may be helpful to consider when designing the user interface (including labeling) of an AI-enabled medical device. It may also be helpful to integrate a model card in the device labeling to clearly communicate information about an AI-enabled device (see Appendix E (Example Model Card)).

Note that inclusion of a unique device identifier (UDI) in the labeling is required for devices, including AI-enabled devices, that are subject to UDI requirements.23 A new UDI is required when there is a new version and/or model, and for new device packages.24 See FDA’s website on unique device identification for more information.

A. User Interface

Why should it be included in a submission for an AI-enabled device: It is important for FDA to understand the device’s user interface, in order to understand how the device is used. The user interface can convey important information about what the device is intended to do, and how users are intended to interact with it. Seeing the user interface can help FDA understand how the device will be operated and how it will fit into the clinical workflow, which can support the review of a device and help the agency determine whether it is safe and effective.

A representation of the user interface can also serve to support the sponsor’s risk assessment and other documentation when the user interface is referenced as an element of those sections. For example, the user interface can communicate important information to users that supports safe and effective use of the device, and the user interface design may play a crucial role in controlling or eliminating risks associated with not knowing or misunderstanding information that is critical to the safe and effective use of the device. While not required, if a sponsor chooses to use elements of the user interface as part of risk control in the risk assessment, the inclusion of the user interface can help further facilitate review. Further information on this topic is described in Section VII (Risk Assessment) and Appendix D (Usability Evaluation Considerations).

While the user interface does include the printed labeling (e.g., packaging and user manuals) and all elements of the user interface should be designed to collectively support the user’s understanding of how to use the device, sponsors should submit labeling separately as described in Section VI.B (Labeling). This section describes how sponsors should provide FDA with an understanding of the remaining elements of the user interface.

What sponsors should include in a submission: Sponsors should provide information about and descriptions of the user interface that makes clear the device workflow, including the information that is provided to users, when the information is provided, and how it is presented. Possible methods to provide this type of information about the user interface include:

- A graphical representation (e.g., photographs, illustrations, wireframes, line drawings) of the device and its user interface. This may include a depiction of the overall device and all components of the user interface with which the user will interact (e.g., display and function screens, alarm speakers, controls).

- A written description of the device user interface.

- An overview of the operational sequence of the device and the user’s expected interactions with the user interface. This may include the sequence of user actions performed to use the device and resulting device responses, when appropriate.

- Examples of the output format, including example reports representing a range of expected outcomes.

- A demonstration of the device, for example by providing a recorded video.

Where sponsors can provide it in a submission: The user interface information should be included in the “Software Description” in the Software Documentation section of the marketing submission.

B. Labeling 🔗

Why should it be included in a submission for an AI-enabled device: A marketing submission must include labeling information in sufficient detail to help FDA determine that the proposed labeling satisfies applicable requirements for the type of marketing submission.25 Device labeling must satisfy all applicable FDA labeling requirements, including, but not limited to, 21 CFR Part 801, as discussed above.26 This section of the guidance includes labeling considerations for AI-enabled devices to support compliance with these requirements.

What sponsors should include in a submission: The labeling for an AI-enabled device should address the following types of information in a format and at a reading level that is appropriate for the intended user (e.g., considering characteristics such as age, education or literacy level, sensory or physical impairments, or occupational specialty) to help ensure users can quickly access important information. Tables and graphics may be used to communicate this information.

Inclusion of AI

- Statement that AI is used in the device.

- Explanation of how AI is used to achieve the device’s intended use.

- For devices with multiple functions, this explanation may include how AI-DSFs interact with each other as well as how they interact with non-AI DSFs.

Model Input

- Description of the model inputs (e.g., signals or patterns acquired from other compatible devices, images from an acquisition system (e.g., MRI), or patient-derived samples, which can be input manually or automatically). Related aspects to consider include:

- For systems incorporating inputs from an electronic interface, information on the necessary system configuration to ensure the inputs are consistent with the design and validation of the AI-enabled device.27

- For systems that require input from other medical devices (e.g., an x-ray device), a list of the specific compatible devices or device specifications, along with the acceptable acquisition protocols, as applicable.

- For systems in which the loss of model inputs may prevent the AI-enabled device from generating an output, an explanation of the potential impact of the lost inputs on the performance of the AI-enabled device.

- Instructions on any steps the user is expected to take to prepare input data for processing by the device, including any expected characteristics (e.g., functional capabilities, experience and knowledge levels, and level of training) of those performing these steps. This information should be consistent with the intended use that was studied in the device validation.

Model Output

- Explanation of what the model output means and how it is intended to be used.

Automation

- Explanation of the intended degree of automation the device exhibits.

Model Architecture

- High-level description of the methods and architecture used to develop the model(s) implemented in the device.

Model Development Data

- Description of the development data, including:

- The source(s) of data;

- Study sites;

- Sample size;

- Demographic distributions; and

- Criteria/expertise used for determining clinical reference standard (ground truth).

Performance Data

- Description of the performance validation data, including:

- The source(s) of data;

- Study sites;

- Sample size;

- Other important study design and data structure information (e.g., randomization schemes, repeated measurements, clinical reference standard);

- Primary endpoints of the validation study, including pre-specified performance criteria; and

- Criteria/expertise used for determining clinical reference standard data.

Device Performance Metrics

- Description of the device performance metrics.

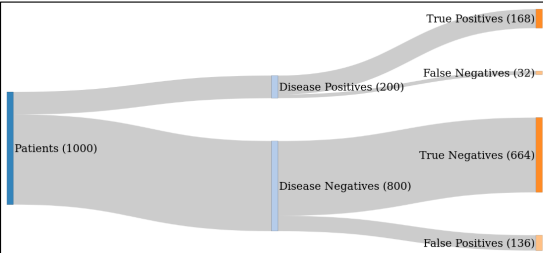

- An example of performance metrics may include metrics such as the area under the receiver operating characteristic curve (AUROC), sensitivity and specificity, true/false positive and true/false negative counts (e.g., in a confusion matrix), positive/negative predictive values (PPV/NPV), and positive/negative diagnostic likelihood ratios (PLR/NLR). All performance estimates should be provided with confidence intervals.

- Explanation of the device performance across important subgroups. Generally, subgroup analysis by patient characteristics (e.g., sex,28 age, race, ethnicity,29 disease severity), geographic sites, and data collection equipment are appropriate.

- Description of the corresponding performance for different operating points, including subgroup analysis for each operating point, as applicable.
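As an illustration only, the sketch below shows one way the metrics listed above could be reported with 95% confidence intervals from a 2x2 confusion matrix. It assumes Wilson score intervals for sensitivity, specificity, PPV, and NPV, and the log method for the likelihood ratios; these are common choices, not methods specified by this guidance, and the class and function names are hypothetical. PPV and NPV computed this way reflect the prevalence in the test set, which may differ from that of the intended use population.

```python
import math
from dataclasses import dataclass

Z_95 = 1.959963984540054  # standard normal quantile for a two-sided 95% interval


def wilson_interval(successes: int, n: int, z: float = Z_95) -> tuple[float, float]:
    """Wilson score confidence interval for a binomial proportion."""
    if n == 0:
        return (math.nan, math.nan)
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int
    fp: int
    tn: int
    fn: int

    def proportions(self) -> dict[str, tuple[float, float, float]]:
        """(estimate, lower, upper) for each proportion-type metric; assumes nonzero denominators."""
        def est(k: int, n: int) -> tuple[float, float, float]:
            return (k / n, *wilson_interval(k, n))

        return {
            "sensitivity": est(self.tp, self.tp + self.fn),
            "specificity": est(self.tn, self.tn + self.fp),
            "ppv": est(self.tp, self.tp + self.fp),
            "npv": est(self.tn, self.tn + self.fn),
        }

    def likelihood_ratios(self, z: float = Z_95) -> dict[str, tuple[float, float, float]]:
        """PLR and NLR with log-method 95% intervals; assumes no zero cells."""
        pos, neg = self.tp + self.fn, self.tn + self.fp
        sens, spec = self.tp / pos, self.tn / neg
        plr, nlr = sens / (1 - spec), (1 - sens) / spec
        # Standard errors of ln(PLR) and ln(NLR).
        se_plr = math.sqrt(1 / self.tp - 1 / pos + 1 / self.fp - 1 / neg)
        se_nlr = math.sqrt(1 / self.fn - 1 / pos + 1 / self.tn - 1 / neg)
        return {
            "plr": (plr, plr * math.exp(-z * se_plr), plr * math.exp(z * se_plr)),
            "nlr": (nlr, nlr * math.exp(-z * se_nlr), nlr * math.exp(z * se_nlr)),
        }
```

For example, hypothetical counts of `ConfusionMatrix(tp=90, fp=20, tn=180, fn=10)` give a sensitivity of 0.90 with a Wilson interval of roughly 0.83 to 0.94. The same calculation can be repeated per subgroup and per operating point.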

Performance Monitoring

- Description of any methods or tools to monitor and manage device performance, including instructions for the use of such tools, as applicable when ongoing performance monitoring and management by the user is considered necessary for the safe and effective use of the device.

Limitations

- Description of all known limitations of the AI-enabled device, AI-DSF(s), or model(s).

- Some limitations of a model may not reach the degree of severity that would warrant a contraindication, warning, or precaution, but they may still be important to include in labeling. For example, the training dataset may have only included a few patients with a rare presentation of a disease or condition; users may benefit from knowing the limitations of the data when that rare presentation is suggested by the model as a diagnosis.

Installation and Use

- Information about the installation and implementation instructions, including:

- Instructions on integrating the AI-enabled device into the site’s data systems and clinical workflow; and

- Instructions for ensuring that any input data are compatible and appropriate for the device.30

- Terms may need to be explicitly defined. For example, a healthcare system and a manufacturer may both have data labeled as “sex,” but one may be using sex at birth while the other may be using self-reported sex.
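The point about explicitly defined terms can be made checkable during installation. The following is a minimal sketch, assuming the manufacturer provides a data dictionary stating what each input field means and which coded values it accepts; `FieldDefinition`, `DEVICE_DICTIONARY`, and the `view` field are hypothetical and not drawn from this guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldDefinition:
    definition: str            # what the field means, spelled out explicitly
    allowed_values: frozenset  # coded values accepted for this field


# Hypothetical data dictionary: the field meanings the device was validated against.
DEVICE_DICTIONARY = {
    "sex": FieldDefinition("sex assigned at birth", frozenset({"female", "male"})),
    "view": FieldDefinition("chest radiograph projection", frozenset({"PA", "AP"})),
}


def check_site_dictionary(site: dict[str, FieldDefinition]) -> list[str]:
    """Return human-readable mismatches between a site's field definitions and the device's."""
    problems = []
    for name, expected in DEVICE_DICTIONARY.items():
        actual = site.get(name)
        if actual is None:
            problems.append(f"{name}: not provided by site")
        elif actual.definition != expected.definition:
            problems.append(
                f"{name}: site uses {actual.definition!r}, device expects {expected.definition!r}"
            )
        elif not actual.allowed_values <= expected.allowed_values:
            extra = sorted(actual.allowed_values - expected.allowed_values)
            problems.append(f"{name}: unsupported values {extra}")
    return problems
```

A site that records self-reported sex, or that sends a projection the device was not validated on, would be reported before any data reach the model rather than silently accepted.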

Customization

- Description of and instructions on any customizable features, including:

- When users or healthcare systems can configure the operating points for the device;

- When it is appropriate to select different configurations; and

- When operating points are configurable, how end users can discern the operating point the device is currently operating at.
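One possible way to make the active operating point discernible is to restrict configuration to named, validated operating points and to attach the active one to every output. This is a hypothetical sketch; the class names, operating-point names, and thresholds are placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    name: str
    threshold: float  # model score at or above which the output is reported as positive


class ConfigurableDevice:
    """Hypothetical wrapper that only allows switching among validated operating points."""

    def __init__(self, points: list[OperatingPoint], default: str) -> None:
        self._points = {p.name: p for p in points}
        self._current = self._points[default]

    @property
    def current(self) -> OperatingPoint:
        return self._current

    def configure(self, name: str) -> None:
        if name not in self._points:
            raise ValueError(f"{name!r} is not a validated operating point: {sorted(self._points)}")
        self._current = self._points[name]

    def output(self, score: float) -> dict:
        # Every result carries the active operating point so users can see which one produced it.
        return {
            "positive": score >= self._current.threshold,
            "operating_point": self._current.name,
            "threshold": self._current.threshold,
        }
```

Rejecting unlisted thresholds keeps the device within the operating points whose performance is described in the labeling.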

Metrics and Visualizations

- Explanation of any additional metrics or visualizations used to add context to the model output.

Patient and Caregiver Information

For AI-enabled devices intended for use by patients or caregivers, manufacturers should provide labeling material that is designed for patients and caregivers describing the instructions for use, the device’s indication, intended use, risks, and limitations. Patients and caregivers are considered users if they will operate the device, interpret the outcome, or make decisions based on the outcome, even if they are not the only user or the primary operator of the device. This material should be at an appropriate reading level for the intended audience. If patient- and caregiver-specific material is not provided, sponsors should provide an explanation of how patients and caregivers will understand how to use the device, including how to make decisions about whether to use the device and how to use the output of the device.

Where sponsors should provide it in a submission: Information regarding the AI-enabled device labeling should be included in the “Labeling” section of the marketing submission.

ADDITIONAL RESOURCES:

- Appendix B (Transparency Design Considerations) outlines a potential approach to understanding a device’s indications for use and a model card, which may aid in the development of the user interface.

- While model cards are not required for presenting information about the labeling or user interface, they may be a helpful tool to organize information. In general, model cards can be adapted to the specific needs and context of each AI-enabled device.

- Appendix E (Example Model Card) includes an example of a basic model card format intended for users and healthcare providers that conveys information including a summary of the model’s intended use and intended users, and evidence supporting safety and effectiveness.

- Appendix F (Example 510(k) Submission Summary with Model Card) includes an example of a completed basic model card.

- FDA’s guidance titled “Device Labeling Guidance #G91-1 (Blue Book Memo)” includes suggestions regarding what information should be included within device labeling.

VII. Risk Assessment 🔗

Why should it be included in a submission for an AI-enabled device: A comprehensive risk assessment helps ensure the device is safe and effective. When included in a marketing submission, a comprehensive risk assessment helps FDA understand whether appropriate risks have been identified and how they are controlled. In Section VI.C of the Premarket Software Guidance, FDA recommends that marketing submissions that include device software functions include a risk management file composed of a risk management plan, a risk assessment, and a risk management report. Consistent with this, marketing submissions of AI-enabled devices should include a risk management file that takes into account the recommendations of the Premarket Software Guidance and the recommendations of this guidance, in addition to any other applicable guidance.

Sponsors should also refer to the FDA-recognized version of ANSI/AAMI/ISO 14971 Medical devices - Application of risk management to medical devices for additional information on the development and application of a risk management file, which is also applicable to AI-enabled devices. FDA also recognizes that AI-enabled devices can be associated with risks that are new or different from those of device software functions generally. Therefore, FDA also recommends that sponsors incorporate the considerations outlined in the FDA-recognized voluntary consensus standard AAMI CR34971 Guidance on the Application of ISO 14971 to Artificial Intelligence and Machine Learning, which is specific to AI-enabled devices.

Risks Across the TPLC

Regarding risk analysis, the Medical Devices; Current Good Manufacturing Practice (CGMP) final rule (Oct. 7, 1996, 61 FR 52602) states that “manufacturers are expected to identify possible hazards associated with the design in both normal and fault conditions. The risks associated with the hazards, including those resulting from user error, should then be calculated in both normal and fault conditions. If any risk is judged unacceptable, it should be reduced to acceptable levels by the appropriate means.” This risk assessment should take into account all users, as described in Section VI (User Interface and Labeling) of this guidance, across the TPLC. FDA recommends that manufacturers follow this approach for AI-enabled devices across their TPLC.
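ISO 14971 leaves the risk acceptability criteria to the manufacturer’s risk management plan. As a hedged sketch only, the code below records hazards under normal and fault conditions and flags any whose estimated risk is unacceptable before controls with no control listed, or still unacceptable after controls. The five-level scales and the severity-times-probability threshold are hypothetical policy choices, not values taken from this guidance or from the standard.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Optional


class Severity(IntEnum):
    NEGLIGIBLE = 1
    MINOR = 2
    SERIOUS = 3
    CRITICAL = 4
    CATASTROPHIC = 5


class Probability(IntEnum):
    IMPROBABLE = 1
    REMOTE = 2
    OCCASIONAL = 3
    PROBABLE = 4
    FREQUENT = 5


UNACCEPTABLE_SCORE = 10  # hypothetical policy: severity x probability >= 10 is unacceptable


def acceptable(severity: Severity, probability: Probability) -> bool:
    return severity * probability < UNACCEPTABLE_SCORE


@dataclass
class HazardEntry:
    hazard: str
    condition: str                      # "normal" or "fault"
    severity: Severity
    probability: Probability            # estimated before risk controls
    controls: list[str] = field(default_factory=list)
    residual_probability: Optional[Probability] = None  # estimated after risk controls


def review(entries: list[HazardEntry]) -> list[str]:
    """Flag risks that are unacceptable with no control listed, or still unacceptable after controls."""
    findings = []
    for e in entries:
        if acceptable(e.severity, e.probability):
            continue
        if not e.controls:
            findings.append(f"{e.hazard} [{e.condition}]: unacceptable and no risk control listed")
        elif not acceptable(e.severity, e.residual_probability or e.probability):
            findings.append(f"{e.hazard} [{e.condition}]: residual risk still unacceptable")
    return findings
```

Information-related hazards, such as a user misreading an output overlay, can be entered alongside software faults, with labeling or user interface elements listed as their controls.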

Risks Related to Information in AI-Enabled Devices

One aspect of risk management that can be particularly important for AI-enabled devices is the management of risks that are related to understanding information that is necessary to use or interpret the device, including risks related to lack of information or unclear information. Misunderstood, misused, or unavailable information can impact the safe and effective use of a device. For example, for devices that utilize complex algorithms, including AI-enabled devices, the performance in different disease subtypes may not be apparent to users, or the logic underlying the output information may not be easily understandable, which can negatively affect user understanding and use of the device. A lack of information, or unclear information, can also make it difficult for different users to understand whether a device is not performing as expected or how to correctly follow instructions. FDA recommends that consideration of risks related to understanding information be one part of a comprehensive approach to risk management for an AI-enabled device.

ADDITIONAL RESOURCES:

- ANSI/AAMI HE75 Human factors engineering - Design of medical devices includes recommendations on using information in labeling to help control risks.

What sponsors should include in a submission: Sponsors should provide a “Risk Management File” that includes a risk management plan, including a risk assessment. In addition to other considerations, the risk assessment should consider user tasks and knowledge tasks that occur throughout the full continuum of use of the device, including, for example, the process of installing the device, maintaining performance over time, and any risks associated with user interpretation of the results of a device, as appropriate.

In addition to the considerations provided in FDA-recognized voluntary consensus standards31 and applicable guidances,32 FDA recommends that sponsors consider the risks related to understanding information during the risk assessment. As with all identified risks, sponsors should provide an explanation of any risk controls, including elements of the user interface, such as labeling, that address the identified risks. Information that may be helpful to discuss such risks and their controls, as applicable, is provided in Appendix D (Usability Evaluation Considerations).

Where sponsors should provide it in a submission: Much of the information on risk assessment for an AI-enabled device should be included in the “Risk Management File” in the Software Documentation section of the marketing submission, as recommended by the Premarket Software Guidance.

ADDITIONAL RESOURCES:

- Appendix B (Transparency Design Considerations) outlines recommendations for a user-centered design approach to developing a device, which may aid in the identification of risks and development of risk controls.

- Appendix D (Usability Evaluation Considerations) provides recommendations on usability testing, which may help sponsors evaluate the efficacy of proposed controls for information-related risks.

VIII. Data Management 🔗

Why should it be included in a submission for an AI-enabled device: For an AI-enabled device, the model is part of the mechanism of action. Therefore, a clear explanation of the data management, including data management practices (i.e., how data has been or will be collected, processed, annotated, stored, controlled, and used) and characterization of data used in the development and validation of the AI-enabled device is critical for FDA to understand how the device was developed and validated. This understanding helps to enable FDA’s evaluation of an AI-enabled device’s safety and effectiveness.

The performance and behavior of AI systems rely heavily on the quality, diversity, and quantity of data used to train and tune them. The accuracy and usefulness of a validation of an AI-enabled device also depends on the quality, diversity, and quantity of data used to test it. Thus, FDA reviewers evaluate data management in order to understand whether an AI-enabled device is safe and effective. This includes the alignment of the collection and management of training and test data with the intended use and resulting device requirements.

Data management is also an important means of identifying and mitigating bias. The characterization of sources of bias is necessary to assess the potential for AI bias in the AI-enabled device. AI bias is a potential tendency to produce incorrect results in a systematic, but sometimes unforeseeable, way due to limitations in the training data or erroneous assumptions in the machine learning process. AI bias has been well documented.33 For example, during training, models can be over-trained to recognize features of images that are unique to specific scanners, patient subpopulations, or clinical sites but have little to do with generalizable patient anatomy, physiology, or condition, which can lead to AI bias in the resulting model. In another example, underrepresentation of certain populations in datasets could lead to overfitting (i.e., the model fitting too closely to the potential biases of the training data) based on demographic characteristics, which can impact the AI-enabled device performance in the underrepresented population.

Using unbiased, representative training data for models promotes generalizability to the intended use population and avoids perpetuating biases or idiosyncrasies from the data itself. For example, in image recognition tasks, confounding may occur when all the diseased cases are imaged with the same instrument, or with a ruler included (e.g., on clinical images of melanoma). Another example of a potential confounding factor is the use of data collected outside the U.S. (OUS) in training, which may bias the model if the OUS population does not reflect the U.S. population due to differences in demographics, practice of medicine, or standard of care. Such confounders in the training data, if not identified and mitigated, can be inadvertently learned by a model, leading to seemingly accurate (but misleading) predictions based on irrelevant characteristics.
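A simple screen for the kind of confounding described above is to measure how strongly each acquisition attribute (e.g., scanner, site) is associated with the label in the development data. The sketch below uses Cramér’s V on the contingency table; the 0.3 threshold and the record field names are hypothetical. A strong association does not prove that a model has learned a shortcut, but it identifies attributes worth examining further.

```python
import math
from collections import Counter


def cramers_v(pairs: list[tuple]) -> float:
    """Cramér's V between two categorical variables given as (x, y) pairs; 0 = none, 1 = perfect."""
    n = len(pairs)
    joint = Counter(pairs)
    x_tot = Counter(x for x, _ in pairs)
    y_tot = Counter(y for _, y in pairs)
    chi2 = 0.0
    for x in x_tot:
        for y in y_tot:
            expected = x_tot[x] * y_tot[y] / n
            chi2 += (joint[(x, y)] - expected) ** 2 / expected
    k = min(len(x_tot), len(y_tot)) - 1
    return math.sqrt(chi2 / (n * k)) if k > 0 else 0.0


def screen_confounders(records: list[dict], label_key: str, metadata_keys: list[str],
                       threshold: float = 0.3) -> dict[str, float]:
    """Return metadata fields whose association with the label meets the (hypothetical) threshold."""
    flagged = {}
    for key in metadata_keys:
        v = cramers_v([(r[key], r[label_key]) for r in records])
        if v >= threshold:
            flagged[key] = v
    return flagged
```

In a dataset where every diseased case came from scanner A and every non-diseased case from scanner B, `scanner` would be flagged with V = 1.0, the situation in which a model can reach high apparent accuracy by recognizing the scanner.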

The inclusion of representative data in validation datasets may be important, because underrepresentation may impact the ability to identify any performance problems, including understanding performance in underrepresented populations. Although bias may be difficult to eliminate completely, FDA recommends that manufacturers, as a starting point, ensure that the validation data sufficiently represent the intended use (target) population of a medical device. For more information regarding age-, race-, ethnicity-, and sex-specific data, please see the FDA guidances titled “Collection of Race and Ethnicity Data in Clinical Trials and Clinical Studies for FDA-Regulated Medical Products,”34 “Evaluation and Reporting of Age-, Race-, and Ethnicity-Specific Data in Medical Device Clinical Studies,” and “Evaluation of Sex-Specific Data in Medical Device Clinical Studies.”

If the same confounders are found in the validation data as in the development data, it may be particularly difficult to identify the spurious correlations that appear to be leading to correct predictions. Therefore, information about the representativeness of the datasets used in the development and validation of the AI-enabled device is important to help FDA determine substantial equivalence or whether there is a reasonable assurance that the device is safe and effective for its intended use.35 Beyond addressing AI bias, the details of the data management should support the intended use of the device.

To objectively assess the device performance, it is also important for FDA reviewers to understand whether the test data are independent (e.g., sampled from completely different clinical sites) from the training data and are sequestered from the model developers and the model development stage. Appropriate separation of the development and test datasets can help with evaluating the true performance of an AI-enabled device. Data leakage between the validation and development datasets can create uncertainty regarding the true performance of the AI-enabled device.36
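Two common leakage routes are the same patient appearing in both the development and test data, and the same file being copied into both. The sketch below checks both, assuming each record carries a `record_id`, a `patient_id` that is consistent across data sources, and the raw file bytes; these field names are hypothetical. Exact hashing only catches byte-identical copies, so re-exported or cropped images would need a separate near-duplicate check.

```python
import hashlib


def _digest(content: bytes) -> str:
    return hashlib.sha256(content).hexdigest()


def leakage_report(development: list[dict], test: list[dict]) -> dict[str, list[str]]:
    """Report patients shared between the two sets and test records that duplicate development files.

    Each record is a dict with 'record_id', 'patient_id', and raw 'content' bytes.
    """
    dev_patients = {r["patient_id"] for r in development}
    dev_digests = {_digest(r["content"]) for r in development}
    return {
        "shared_patients": sorted({r["patient_id"] for r in test} & dev_patients),
        "duplicated_test_records": sorted(
            r["record_id"] for r in test if _digest(r["content"]) in dev_digests
        ),
    }
```

An empty report is necessary but not sufficient evidence of independence; site-level separation and sequestration from the model developers are separate controls.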

What sponsors should include in a submission: In a submission, a sponsor should provide the following types of information for both the training and testing data, in the appropriate marketing submission sections. It may be helpful to organize data management information by the sections described below. Generally, information on data collection, development and test data independence, reference standards, and representativeness should be provided. Sponsors should also explain and justify any differences in the data management approach, and in the characteristics of the data, between the development and validation phases.

Data Collection

- A description of how data were collected (e.g., clinical study protocols with inclusion/exclusion criteria), including:

- The names of clinical sites or institutions involved.

- Sites should be uniquely identified, and they should be referred to consistently throughout the submission.

- The time period during which the data were acquired.

- If data were used from a pre-existing database, the appropriateness of the use of this database.

- If real-world data (RWD) are used, the source and collection of this evidence.

- If RWD are used, FDA recommends that sponsors provide an assessment of fit-for-purpose data for the selected data source(s) that evaluates both the relevance and reliability of the RWD. FDA encourages sponsors to leverage the Q-Submission Program for obtaining FDA feedback on proposed uses of RWD. For more information regarding RWD, please see the FDA guidance titled “Use of Real-World Evidence to Support Regulatory Decision-Making for Medical Devices.”


- A description of the limitations of the dataset.

- A description of the quality assurance processes related to the data, including the controls that were put in place to protect from human error during data acquisition, when applicable.

- A description of the size of each dataset.

- A description of the mechanisms used to improve diversity in enrollment within the scope of the study, and how they ensure the generalizability of study results across patient populations and clinical sites.37 For more information on this topic, please see the FDA guidance titled “Collection of Race and Ethnicity Data in Clinical Trials.”

- A description of the use of synthetic data.38 Synthetic data used in support of a regulatory submission should be accompanied by a comprehensive explanation of how the data were generated and why they are fit-for-purpose.

Data Cleaning/Processing

To achieve optimal training results, it may be important to clean data used for development, such as by removing incorrect, duplicate, or incomplete data. These processing steps should be described, including data quality factors used, data inclusion/exclusion criteria, treatment of missing data, and whether the steps are internal or external to the AI-DSF.

Testing data, on the other hand, should only be processed in a manner that is representative of the RWD the model will encounter in its intended use. Any such data processing, data quality factors used, data inclusion/exclusion criteria, and treatment of missing data should be justified as aligned with pre-processing implemented in the final AI-DSF.
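The distinction between development-only cleaning and test-time processing can be kept explicit in code. In this minimal sketch, `device_preprocess` stands in for the pre-processing built into the final AI-DSF (hypothetically, min-max normalization that rejects missing input), and it is the only processing applied to test data; deduplication and removal of incomplete records are applied to development data alone, with counts kept so the steps can be described in the submission. All names are hypothetical.

```python
from collections import Counter
from typing import Optional


def device_preprocess(signal: Optional[list[float]]) -> Optional[list[float]]:
    """Hypothetical pre-processing shipped inside the final AI-DSF.

    Missing or empty input is rejected (returns None), as the device would do in the field.
    """
    if not signal:
        return None
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return [0.0] * len(signal)
    return [(x - lo) / (hi - lo) for x in signal]


def clean_development_records(records: list[dict]) -> tuple[list[dict], dict[str, int]]:
    """Development-only cleaning: drop incomplete and duplicate records, counting each removal."""
    kept, seen, removed = [], set(), Counter()
    for r in records:
        if not r.get("signal") or r.get("label") is None:
            removed["incomplete"] += 1
            continue
        key = (tuple(r["signal"]), r["label"])
        if key in seen:
            removed["duplicate"] += 1
            continue
        seen.add(key)
        kept.append(r)
    return kept, dict(removed)


def prepare_test_inputs(records: list[dict]) -> list[Optional[list[float]]]:
    """Test records pass through the device's own pre-processing only; nothing is dropped here."""
    return [device_preprocess(r.get("signal")) for r in records]
```

Because test records that the device would reject still appear (as `None`) in the test inputs, they remain visible in the performance evaluation instead of being silently excluded.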

Reference Standard

For the purposes of this guidance, a reference standard is the best available representative truth that can be used to define the true condition for each patient/case/record.39 It is possible that a reference standard may be used in device training, device validation, or both. A reference standard is validated by evidence from current practice within the medical and regulatory communities for establishing a patient’s true status with respect to a clinical task. The reference standard should reflect the clinical task. Clinical tasks may consist of, for example, classification of a disease or condition, segmentation of contours on medical images, detection by bounding boxes, or localization by markings. The following types of information should be provided regarding the selected reference standard:

- A description of how the reference standard was established.

- A description of the uncertainty inherent in the selected reference standard.

- A description of the strategy for addressing cases where results obtained using a reference standard may be equivocal or missing.

- If the reference standard is based on evaluations from clinicians, provide:

- The grading protocol used.

- What data are provided to these clinicians.

- How the clinicians’ evaluations are collected/adjudicated for determining the clinical reference standard, including:

- blinding protocol; and

- number of participating clinicians and their qualifications.

- An assessment of the intra- and/or inter-clinician variability for each task, as applicable, as well as an assessment of whether the observed variability is within commonly accepted standards for a particular measurement task.
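For a categorical task labeled by two clinicians, one common chance-corrected measure of inter-clinician agreement is Cohen’s kappa, sketched below. It is one option among several (e.g., Fleiss’ kappa for more than two clinicians, or an intraclass correlation coefficient for continuous measurements), and this guidance does not prescribe a particular statistic.

```python
import math
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labeling the same cases: (p_o - p_e) / (1 - p_e)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: fraction of cases given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a.keys() | counts_b.keys()) / n**2
    if expected == 1.0:
        return math.nan  # both raters used one identical category; kappa is undefined
    return (observed - expected) / (1 - expected)
```

For example, if two clinicians label four cases as `[1, 1, 0, 0]` and `[1, 0, 0, 0]`, observed agreement is 0.75, chance agreement is 0.5, and kappa is 0.5.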

Data Annotation

- When data annotation is used, the following types of information should be provided regarding the data annotation approach:

- A description of the expertise of those performing the data annotation.

- A description of the specific training, instructions or guidelines provided to data annotators to guide their annotation decisions, including whether annotators are blinded to each other.

- A description of the methods for evaluating quality/consistency of data annotations and adjudicating disagreements (e.g., consensus evaluation, sampling). FDA recommends the use of independent assessments by each annotator, without knowledge of the other annotators’ decisions, to ensure objective, high-quality data annotations; and

- A detailed plan for addressing incorrect data annotation.
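The sketch below illustrates one hypothetical way to combine independent annotations: each annotator’s label is collected without sharing, cases with a tie or with agreement below a configurable level are routed to adjudication, and the remaining cases receive the majority label. The function name and the default of requiring unanimous agreement are assumptions for illustration, not recommendations.

```python
from collections import Counter


def consolidate(annotations: dict[str, dict[str, str]],
                min_agreement: float = 1.0) -> tuple[dict[str, str], list[str]]:
    """Merge independent annotations per case; route ties or low agreement to adjudication.

    annotations maps case_id -> {annotator_id: label}; each annotator labeled without
    seeing the others. Returns (consensus labels, case_ids needing adjudication).
    """
    consensus, needs_adjudication = {}, []
    for case_id, labels in annotations.items():
        ranked = Counter(labels.values()).most_common()
        top_label, top_count = ranked[0]
        tied = len(ranked) > 1 and ranked[1][1] == top_count
        if tied or top_count / len(labels) < min_agreement:
            needs_adjudication.append(case_id)
        else:
            consensus[case_id] = top_label
    return consensus, sorted(needs_adjudication)
```

Keeping the list of adjudicated cases, rather than only the final labels, also provides the record needed to describe how disagreements and incorrect annotations were handled.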

Data Storage

A description of the data storage of both training and test data. The description should address dataset version control and should explain how the security of the data is ensured by addressing the items described in Section XII (Cybersecurity) of this guidance.
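Dataset version control can be supported by recording a content hash for every file and deriving a version identifier from the full manifest, so that any addition, removal, or modification is detectable. The following is a minimal sketch using SHA-256 from the Python standard library; the function names are hypothetical. Hashing supports integrity checks only; confidentiality and access control would be addressed separately, as described in Section XII (Cybersecurity).

```python
import hashlib
import json
from pathlib import Path


def manifest_from_bytes(files: dict[str, bytes]) -> dict[str, str]:
    """Map each relative path to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in sorted(files.items())}


def build_manifest(root: Path) -> dict[str, str]:
    """Hash every file under root, storing paths relative to root in POSIX form."""
    files = {p.relative_to(root).as_posix(): p.read_bytes() for p in root.rglob("*") if p.is_file()}
    return manifest_from_bytes(files)


def dataset_version(manifest: dict[str, str]) -> str:
    """Short identifier that changes whenever any file is added, removed, or modified."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def diff_manifests(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """List the files that differ between two dataset versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

Recording the version identifier of the training and test datasets alongside each model version makes it possible to show later exactly which data each model was developed and tested on.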

Management and Independence of Data

- A description of the development data, including how the development data were split into training, tuning, tuning evaluation, and any additional subsets, and specification of which model development activities were performed using each dataset.

- A description of the controls in place to ensure the data used for testing is sequestered from the development process.

- A justification of why the data used for validation provides a robust external validation.

For example, a description of the sites from which the test data originate, because, in general, test data should come from sites different from those used to develop the AI-DSF.
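As a hypothetical sketch of the separation described above, the code holds out entire sites for testing and then splits the remaining development data into training and tuning subsets at the patient level, so that no patient contributes to both. The deterministic hash-based assignment, the 20% tuning fraction, and the record fields are assumptions for illustration.

```python
import hashlib


def split_test_by_site(records: list[dict], test_sites: set[str]) -> tuple[list[dict], list[dict]]:
    """Hold out whole sites for testing so no test site contributes development data."""
    development = [r for r in records if r["site"] not in test_sites]
    test = [r for r in records if r["site"] in test_sites]
    return development, test


def _unit_interval(key: str, salt: str) -> float:
    """Deterministically map a key to [0, 1) using SHA-256, stable across runs and machines."""
    digest = hashlib.sha256(f"{salt}:{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def split_development(records: list[dict], tuning_fraction: float = 0.2,
                      salt: str = "split-v1") -> tuple[list[dict], list[dict]]:
    """Assign whole patients to training or tuning so one patient never spans both subsets."""
    training, tuning = [], []
    for r in records:
        target = tuning if _unit_interval(r["patient_id"], salt) < tuning_fraction else training
        target.append(r)
    return training, tuning
```

Because the assignment depends only on the patient identifier and a fixed salt, the split can be reproduced exactly and documented, and records added later for an existing patient land in the same subset.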

Representativeness

- An explanation of how the data is representative of the intended use population40 and indications for use, including:

- A description of the relevant population characteristics, when available, including:

- Disease conditions (e.g., positive/negative cases, disease severity, disease subtype, comorbidities, distribution of the disease spectrum);

- Patient population demographics (e.g., sex,41 age, race, ethnicity,42 height, weight);

- Data acquisition equipment and conditions (e.g., locations at which data are collected, data acquisition devices/methods, imaging and reconstruction protocols), including any factors that may impact signals analyzed during data acquisition (e.g., patient activities, such as whether a patient is ambulatory, resting, standing; or data acquisition environments, such as intensive care unit, MRI); and

- Test data collection sites (e.g., clinical sites, institutions). Generally, while a single data collection site may be a useful starting place during initial data assessment phases, reliance on a single site is generally not appropriate for understanding whether the data are representative of the intended use population and indications for use. The use of multiple data collection sites, such as sites in diverse clinical practice settings (e.g., large academic hospital vs. community hospital) may assure a more representative sample of the intended use population. For example, the use of at least three geographically diverse US clinical sites (or health care systems) may be appropriate to clinically validate an AI-enabled device.44

- A characterization of the distribution of data along important covariates, including those corresponding to the population characteristics described above.

- If any of the relevant population characteristics above were not available for the data, an explanation of why, and a justification of the use of the data without this information. FDA understands that, depending on the source of the patients and/or samples used in the training and test data, some relevant patient characteristic information may not be available.

- A subgroup analysis or analyses stratified by the identified covariates.

- If OUS data are used during validation, an explanation regarding how the data compares to the U.S. population and U.S. medical practice in terms of general medical practice, disease presentation, prevalence, and progression as well as the demographic characteristics of patients.43

- Due to the data-driven nature of typical models and the obscurity of their algorithms to end users, their generalized performance on the U.S. target population may not be adequately captured in the clinical study if a significant portion of the validation data are OUS data. AI-enabled devices may also be more sensitive than traditional medical devices to idiosyncratic patterns in the training or test data. For these reasons, they may require a higher proportion of U.S. data in the clinical validation. FDA encourages sponsors to leverage the Q-Submission process for obtaining FDA feedback on proposed uses of OUS data.44

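A subgroup analysis stratified by an identified covariate could start from a table like the one produced below: the number of cases in each stratum, its share of the dataset, and per-stratum sensitivity and specificity. This is a minimal sketch with hypothetical field names (`truth`, `pred`); in practice each estimate would also be reported with a confidence interval, and small strata will give imprecise estimates.

```python
from collections import defaultdict


def stratified_performance(records: list[dict], covariate: str) -> dict[str, dict]:
    """Per-stratum size, share of the dataset, sensitivity, and specificity.

    Each record needs the covariate key plus boolean 'truth' (reference standard)
    and 'pred' (device output). Metrics are None when a stratum lacks positives or negatives.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for r in records:
        c = counts[r[covariate]]
        if r["truth"]:
            c["tp" if r["pred"] else "fn"] += 1
        else:
            c["fp" if r["pred"] else "tn"] += 1
    summary = {}
    for value, c in sorted(counts.items()):
        positives, negatives = c["tp"] + c["fn"], c["tn"] + c["fp"]
        summary[value] = {
            "n": positives + negatives,
            "share": (positives + negatives) / len(records),
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return summary
```

Comparing the `share` column against the known composition of the intended use population is one way to make the representativeness argument concrete.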

Where sponsors should provide it in a submission: The data management information for data used in the development of the model should be included in the “Software Description” in the Software Documentation section of the marketing submission, as described in the Premarket Software Guidance.

The data management information for data used in the performance validation (i.e., clinical validation) should be included in the “Performance Testing” section of the marketing submission. When the characteristics of data used for model training and validation differ, sponsors should highlight and justify the differences in the performance validation data management section of the performance testing documentation.

ADDITIONAL RESOURCES:

In addition to the considerations in this guidance, to support the TPLC approach to development, FDA recommends that sponsors and investigators consider the unique characteristics of the AI-enabled device during the study design, conduct, and reporting phases for clinical investigations. Researchers should understand how Investigational Device Exemption (IDE), Protection of Human Subjects and Institutional Review Board regulations,47 and Good Clinical Practice (GCP) regulations48 apply to their devices. Resources include consensus guidelines,49 as well as FDA guidances titled:

- “Significant Risk and Nonsignificant Risk Medical Device Studies”

- “Informed Consent Guidance for IRBs, Clinical Investigators, and Sponsors”

- “Acceptance of Clinical Data to Support Medical Device Applications and Submissions: Frequently Asked Questions”

For more information regarding age-, race-, and ethnicity-specific data, and sex-specific data, please see the FDA guidances titled:

- “Collection of Race and Ethnicity Data in Clinical Trials”

- “Evaluation and Reporting of Age-, Race-, and Ethnicity-Specific Data in Medical Device Clinical Studies”

- “Evaluation of Sex-Specific Data in Medical Device Clinical Studies”

IX. Model Description and Development 🔗

Why should it be included in a submission for an AI-enabled device: Information about the model (and device) design, including its biases and limitations, supports FDA’s ability to assess the safety and effectiveness of an AI-enabled device and determine the device’s performance testing specifications.

Section VI.B of the Premarket Software Guidance describes information that should be included as part of a software description in a marketing submission, including the model description. Whereas the device description is broader and provides information about the whole device, how users interact with it, and how it fits into the clinical workflow, the model description, as part of the software description, specifically provides detailed information about the technical characteristics of the model(s) themselves and the algorithms and methods that were used in their development. This information helps FDA understand the basis for the functionality of an AI-enabled device. Understanding the methods used to develop the model also helps FDA identify potential limitations, sources of AI bias, and considerations for appropriate device labeling.

What sponsors should include in a submission: In a submission, sponsors should include the information described below for each model in the AI-enabled device.

In situations where multiple models are employed as part of the AI-enabled device, it can be particularly helpful to include a diagram of how model outputs combine to create the device outputs. The description of the algorithms and models should be sufficiently detailed to enable a competent AI practitioner to produce an equivalent model. The use of diagrams in addition to textual descriptions is encouraged to enhance clarity.

Model Description

- An explanation of each model used as part of the AI-enabled device, including but not limited to:

- Model inputs and outputs;

- A description of model architecture;

- A description of features;

- A description of the feature selection process and any loss function(s) used for model design and optimization, as appropriate; and

- Model parameters.

- In situations where the AI-enabled device has customizable features involving the model, such as being customizable to operate at multiple pre-defined operating points or with a variable number of inputs, a description of the technical elements of the model that allow for and control customization.

- A description of any quality control criteria or algorithms, including AI-based and third-party ones, for the input data, including how the quality assessment metrics align with the intended use of the device (e.g., intended patient population and use environment).

- A description of any methods applied to the input and/or output data, including:

- Pre-processing of input data (e.g., normalization);

- Post-processing of output data; and

- Data augmentation or synthesis.

Model Development

- A description of how the model was trained, including but not limited to:

- Optimization methods;

- Training paradigms (e.g., supervised, unsupervised or semi-supervised learning, federated learning, active learning);

- Regularization techniques employed;

- Training hyperparameters (e.g., the loss function, learning rate) as applicable; and

- Summary training performance such as the loss function convergence curves for the different data subsets (such as training, tuning, tuning evaluation).

- If tuning evaluation was conducted, a description of the metrics and results obtained.

- An explanation of any pre-trained models that were used, as applicable.

- If a pre-trained model was used, specify the dataset that was used for pre-training and how the pre-trained model was obtained.

- A description of the use of ensemble methods (e.g., bagging or boosting), as applicable.

- An explanation of how any thresholds (e.g., operating points) were determined.

- An explanation of any calibration of the model output.
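As an illustration of the kind of threshold determination a sponsor might explain, the sketch below picks an operating point on tuning data by fixing a target sensitivity. This is only one possible approach, assumed here for illustration; the guidance does not prescribe a method, and the function name `threshold_for_sensitivity` and the example scores are hypothetical. The threshold would be locked before the test data are examined.

```python
import math


def threshold_for_sensitivity(scores, labels, target_sensitivity):
    """Highest threshold whose tuning-set sensitivity meets the target.

    Hypothetical helper: a case is called positive when score >= threshold.
    Returns the threshold plus the sensitivity and specificity it achieves
    on the same tuning data.
    """
    if not 0 < target_sensitivity <= 1:
        raise ValueError("target_sensitivity must be in (0, 1]")
    pos = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("tuning data need both positive and negative cases")
    # Minimum number of positives that must be called positive; the small
    # tolerance guards against floating-point error in the product.
    k = max(1, math.ceil(target_sensitivity * len(pos) - 1e-9))
    # The k-th highest positive score is the highest threshold that still
    # calls at least k positives positive.
    threshold = pos[k - 1]
    sensitivity = sum(s >= threshold for s in pos) / len(pos)
    specificity = sum(s < threshold for s in neg) / len(neg)
    return threshold, sensitivity, specificity


# Hypothetical tuning-set model scores and reference-standard labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.35, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
print(threshold_for_sensitivity(scores, labels, target_sensitivity=0.8))
```

Here the selected threshold is 0.60, giving a tuning-set sensitivity and specificity of 0.8 each. Reporting the target, the data subset used, and the resulting trade-off is the kind of explanation the bullet above asks for.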

Where sponsors should provide it in a submission: Information on model development, including the model description, and the method for model development, should be included as part of the “Software Description” in the Software Documentation section of the marketing submission, as described in the Premarket Software Guidance.

ADDITIONAL RESOURCES: In situations where manufacturers wish to consider AI models that automatically or continuously update, FDA encourages manufacturers to use the Q-Submission Program to discuss considerations related to these AI models early in the development process and review the FDA “Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence-Enabled Device Software Functions.”

X. Validation 🔗

For an AI-enabled device, validation includes ensuring that the device, as utilized by users, will perform its intended use safely and effectively, as well as establishing that the relevant performance specifications of the device can be consistently met. For AI-enabled devices, manufacturers should demonstrate users’ ability to interact with and understand the device as intended in addition to ensuring the device itself meets relevant performance specifications. To this end, it can be helpful to consider both performance validation (including human factors validation) and an evaluation of usability. Note that, for the purposes of this guidance (in the context of risk controls in the absence of human factors validation), usability describes whether the device can be used safely and effectively by the intended users, including whether users consistently and correctly receive, understand, interpret, and apply information related to the AI-enabled device.

The FDA guidance titled “Applying Human Factors and Usability Engineering to Medical Devices” (hereafter referred to as “Human Factors Guidance”), describes recommendations and requirements for devices and establishes that human factors validation testing encompasses, “all testing conducted at the end of the device development process to assess user interactions with a device user interface to identify use errors that would or could result in serious harm to the patient or user,” and is also used “to assess the effectiveness of risk management measures.” While the Human Factors Guidance outlines specific recommendations and requirements for human factors validation for devices that have critical tasks, the application of the same or a similar process can also be helpful to demonstrate the appropriate control of other risks. Appendix D (Usability Evaluation Considerations) includes recommendations to help sponsors understand when usability testing may help support the control of risks. The appendix also includes recommendations to help sponsors develop and describe certain types of usability testing in addition to human factors validation, or when human factors validation is not required. The appendix supplements device-specific recommendations and recommendations provided in the Human Factors Guidance where applicable.

Together, performance validation and human factors validation (or an evaluation of usability as appropriate) help provide FDA with information to understand how the device may be used and perform under real-world circumstances. Performance validation may employ a variety of testing and monitoring methods to evaluate the statistical performance of the model under testing conditions, and human factors validation testing involves understanding how various users are likely to use a device in context. In other words, performance validation is meant to provide confirmation that device specifications conform to user needs and intended uses, and that performance requirements implemented can be consistently fulfilled, while human factors validation and an evaluation of usability are meant to specifically address whether all intended users can achieve specific goals while using the device and whether users will be able to consistently interact with the device safely and effectively.

Software Version History

Section VI.I of the Premarket Software Guidance describes information that should be included as part of a software description in a marketing submission, including information regarding the software version history. For AI-enabled devices, the software version history includes consideration of the model version and any differences between the tested version of the model and the released version, along with an assessment of the potential effect of the differences on the safety and effectiveness of the device. It is important for FDA to understand what version of the model was tested in order to ensure that all validation activities will be objective, and that the model has not been adjusted opportunistically in light of the test data (i.e., post-hoc adjustment) without the Agency’s concurrence.

New unique device identifiers (UDIs) are required for devices that are required to bear a UDI on their label when there is a new version and/or model, and for new device packages.45

A. Performance Validation 🔗

Why should it be included in a submission for an AI-enabled device: The performance validation for an AI-enabled device provides objective evidence that the device performs predictably and reliably in the target population according to its intended use. The following recommendations are intended to supplement device-specific recommendations and recommendations provided in other FDA guidances where applicable, including “Design Considerations for Pivotal Clinical Investigations for Medical Devices,” “Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests,” and “Electronic Source Data in Clinical Investigations.”

As part of FDA’s evaluation of safety and effectiveness of the device, it is important for FDA to understand how the device performs overall in the intended use population, as well as in subgroups of interest. Acceptable performance in certain subgroups may mask lower performance in other subgroups when the evaluation is performed only for the total population. Poor performance in specific subgroups could make the device unsafe for use in those groups, which may impact the potential scope of the intended use population. Section VIII (Data Management) outlines why stratification and analyses of subgroups of interest are important to FDA’s evaluation of safety and effectiveness. An analysis of subgroup performance that supports safe and effective use across the expected intended use population also helps to ensure that devices can be used for all intended patients.

While differential performance across subgroups is not unique to AI-enabled devices, the reliance of models on relationships learned from large amounts of data, and the relative opacity of models to users make AI-enabled devices particularly susceptible to unexpected differences in performance. Even when the data used to develop the model is representative during training, models can be over-trained to recognize features of data that are unique to specific characteristics of the study dataset but may be spurious to the identification or treatment of the disease or condition. Spurious learnings could impact performance differentially across characteristics of interest such as disease subtype or patient demographics, especially when data from study participants from different groups tend to be collected at different sites. For example, models may erroneously use demographic information, or another variable correlated with demographic information, as a variable of interest in the model because patients of one demographic tended to be more likely to have a disease in the training data set. This can be particularly difficult to identify with complex models in which the variables of interest may not be understandable to humans. For this reason, the accuracy and usefulness of an evaluation of an AI-enabled device also depends on the quality, diversity, and quantity of data used to test it.

Subgroup analysis provides the tools to evaluate the performance of the device in specific populations and can be helpful in identifying scenarios in which the device performs worse than overall performance. In addition, subgroup analyses are helpful in identifying potential limitations of the device and can contribute to effective labeling by providing end users with additional useful information.
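To illustrate how acceptable overall performance can mask lower performance in a subgroup, the sketch below computes sensitivity overall and per subgroup, each with a Wilson score 95% confidence interval. The helper names, the subgroup labels, and the case counts are hypothetical, chosen only to show the masking effect; real analyses would follow the statistical guidances cited in this section.

```python
import math
from collections import defaultdict


def wilson_interval(successes, n, z=1.96):
    """Wilson score confidence interval for a proportion (default ~95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def sensitivity_by_subgroup(records):
    """Sensitivity (true positive rate) overall and per subgroup.

    records: iterable of (subgroup, truth, prediction) with binary 0/1 labels.
    Only reference-standard positives (truth == 1) contribute.
    Returns {name: (sensitivity, ci_low, ci_high, n_positives)}.
    """
    tp = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, pred in records:
        if truth != 1:
            continue
        # Each positive case counts toward the pooled total and its subgroup.
        for key in ("overall", group):
            positives[key] += 1
            tp[key] += int(pred == 1)
    results = {}
    for key, n in positives.items():
        low, high = wilson_interval(tp[key], n)
        results[key] = (tp[key] / n, low, high, n)
    return results


# Hypothetical test set: 90 positives from subgroup A, 10 from subgroup B.
records = (
    [("subgroup_A", 1, 1)] * 86 + [("subgroup_A", 1, 0)] * 4
    + [("subgroup_B", 1, 1)] * 6 + [("subgroup_B", 1, 0)] * 4
)
for name, (sens, low, high, n) in sensitivity_by_subgroup(records).items():
    print(f"{name}: sensitivity {sens:.2f} (95% CI {low:.2f}-{high:.2f}), n={n}")
```

With these made-up counts the pooled sensitivity is 0.92, while subgroup B reaches only 0.60, with an interval of roughly 0.31 to 0.83. The width of that interval also shows why small subgroups need enough cases before conclusions about their performance can be drawn.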

Information on the uncertainty of device outputs is also important because it helps reviewers understand how to interpret device outputs. When not specified for a device type in statute, regulation, or guidance, repeatability and/or reproducibility studies can still help FDA understand and quantify the uncertainty associated with device outputs when provided.
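For a device with a quantitative output, one common way to summarize a repeatability study is the within-subject standard deviation (wSD) and the repeatability coefficient (RC = 1.96 × √2 × wSD, about 2.77 × wSD). The sketch below is a minimal example that assumes repeated outputs obtained under identical conditions, approximately normal errors, and variability that does not depend on the magnitude of the output. The function name and the data are hypothetical.

```python
import math
from statistics import variance


def repeatability(replicates):
    """Within-subject SD (wSD) and repeatability coefficient (RC).

    replicates: one list per subject of repeated device outputs obtained under
    the same conditions (at least two repeats per subject).
    """
    # Pool each subject's sample variance, weighted by its degrees of freedom.
    num = sum((len(r) - 1) * variance(r) for r in replicates)
    dof = sum(len(r) - 1 for r in replicates)
    wsd = math.sqrt(num / dof)
    # RC = 1.96 * sqrt(2) * wSD: about 95% of absolute differences between
    # two repeat outputs on the same subject are expected to fall below it.
    rc = 1.96 * math.sqrt(2) * wsd
    return wsd, rc


# Hypothetical quantitative outputs: two repeat scans for each of 3 patients.
replicates = [[10.0, 10.4], [15.2, 14.8], [8.1, 8.1]]
wsd, rc = repeatability(replicates)
print(f"wSD = {wsd:.3f}, RC = {rc:.3f}")
```

Reproducibility (for example, across sites, scanners, or operators) would be studied analogously by varying those conditions between the repeated measurements.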