Background 🔗

AI/ML-enabled software devices cleared via 510(k) span several FDA product codes and regulations, each with specific testing expectations. We distilled patterns from 26 recent clearances across multiple radiology AI product codes and cross‑checked the main FDA guidances. Below is a “quick” reference guide grouping the example clearances by their product code/regulation, summarizing required testing and the rationale behind it.

Executive Overview 🔗

| 892.2050 | QIH | Automated image processing and analysis tools (e.g., measurement, segmentation, enhancement) | Software V&V,Technical Performance Assessment,Clinical Evaluation (depends) | % error,Dice,ICC | No direct diagnosis but support care with quantitative outputs—FDA prioritizes analytical validation to ensure they are accurate, reliable, and at least as effective as manual methods, enabling clinicians to trust and act on the results. |

| 892.2070 | MYN | CADe (contours, bounding boxes, etc.) | Software V&V,Standalone performance,Clinical Evaluation (MRMC) | Sensitivity,Specificity,Dice,ΔAUC,ROC plot | CADe tools influence diagnosis, FDA requires both strong algorithm accuracy and reader studies to show they help clinicians, ensuring the device is reliable, effective, and substantially equivalent to its predicate. Ground truth is typically radiologist consensus. |

| 892.2080 |

QAS QFM |

CADt triage and notification | Software V&V,Standalone performance,Clinical Evaluation (Effective Triage) | Sensitivity,Specificity,Time-to-Notification | Triage devices address the risk of delayed diagnosis in emergencies. Thus, FDA insists on evidence that using the software meaningfully accelerates case handling without compromising accuracy. |

| 892.2060 | POK | CADx diagnosis | Software V&V,Standalone performance,Clinical Evaluation (MRMC) | Sensitivity,Specificity,ΔAUC,ROC plot,Predictive value | CADx tools influence diagnosis, FDA requires both strong algorithm accuracy and reader studies to show they help clinicians, ensuring the device is reliable, effective, and substantially equivalent to its predicate. Typically requires stronger ROC-based analytics than CADe. Ground truth may require pathology report or long-term follow up imaging. |

| 892.2090 |

QDQ QBS |

CADe/x | Software V&V,Standalone performance,Clinical Evaluation (MRMC) | Sensitivity,Specificity,ΔAUC,ROC plot,Predictive value,Dice | Combines testing requirements for CADe and CADx. |

Automated Radiological Image Processing Software (21 CFR 892.2050, Product Code QIH) 🔗

Device Types 🔗

This category covers AI-based image analysis software that involve quantitative measurements, image enhancement, or other processing to aid diagnosis. Product code QIH is defined for AI-driven radiological image processing for all pathologies. Examples include AI that segments organs or lesions, quantifies metrics (volumes, dimensions), or automates image processing tasks (e.g. CT calcium scoring). These usually output measurements or visualizations rather than definitive diagnostic conclusions.

Testing Requirements 🔗

- Software Verification & Validation (V&V): Comprehensive software V&V per FDA software guidance. Submit documentation of hazard analysis, requirements, design specs, traceability, and testing in accordance with FDA’s “Content of Premarket Submissions for Software” guidance. These devices are typically considered “Basic” Documentation level under 2023 guidance.

- Technical (Bench) Performance: Emphasis is on analytical validity – i.e. how accurately and reliably the software produces the intended output from the input data. Sponsors must define performance specifications for each quantitative function and provide supporting data that those specs are metfda.gov. For example, a lung nodule volume software would be tested for measurement accuracy against known-volume phantoms or radiologist manual measurements. A calcium scoring AI (e.g. CAC scoring) would be bench-tested against the standard scoring method. The FDA’s quantitative imaging guidance advises manufacturers to specify key performance metrics and demonstrate results for themfda.gov. This may include measurement bias and variance, test-retest reproducibility, segmentation Dice scores, etc., depending on the function. Tests should span the intended image acquisition variations (different scanners, protocols) and patient subgroups to ensure generalizability.

- Comparison to Reference Standard: When applicable, the AI’s outputs are compared to ground truth references. For instance, an AI that auto-measures an anatomical feature should be validated against expert manual measurements or known physical dimensions. Accuracy is quantified in clinically meaningful terms (e.g. ± error limits, correlation, sensitivity/specificity if classifying normal vs abnormal). Any clinically accepted performance targets should be met.

- Clinical Evaluation (as needed): If the software’s claim or intended use implies a clinical impact, some level of clinical validation may be expected. Often, however, QIH devices perform auxiliary tasks (measurements or image manipulations) where clinical utility is already well established. In such cases, FDA may not require a new prospective clinical study – demonstrating analytical accuracy may suffice. Sponsors should justify that the measured parameter is clinically accepted.

Why These Tests? 🔗

These tools don’t directly diagnose disease but provide quantitative data or processed images that clinicians rely on. Thus, technical performance is paramount – the device must be shown to be precise and accurate, since any significant errors could mislead diagnosis or treatment planning. FDA expects manufacturers to state the performance claims and back them with data, aligning with the principle of analytical validation. If the device automates a task previously done manually, it must perform at least as well as a human (or an accepted standard), which bench testing must confirm. Clinical validation is needed only to the extent that the quantitative output’s relevance to patient care needs confirmation (often already known). In summary, the testing ensures the AI reliably does what it claims (e.g. correctly segment a prostate MRI or measure a vessel diameter), so that clinicians can use the information with confidence.

Medical Image Analyzers (21 CFR 892.2070, Product Code MYN) 🔗

Device Types 🔗

These are concurrent computer-assisted detection (CADe) tools that analyze medical images (often X-rays) and mark regions of interest (ROIs) for the clinician. Examples: chest X-ray analyzers (e.g. lung nodule detection), dental X-ray caries detectors, etc. (see K241725 and K243239). These assist diagnosis by highlighting findings but do not make autonomous diagnoses.

Testing Requirements 🔗

- Software Verification & Validation (V&V): Comprehensive software V&V per FDA software guidance. Submit documentation of hazard analysis, requirements, design specs, traceability, and testing in accordance with FDA’s “Content of Premarket Submissions for Software” guidance. These devices are typically considered “Basic” Documentation level under 2023 guidance.

- Stand-Alone Algorithm Performance: A robust standalone performance assessment on independent test data is expected. Sponsors must report detection accuracy metrics – e.g. sensitivity, specificity, ROC/AUC, false-positive rates – on a “large”, representative test dataset. For example, the Aorta-CAD clearance reported high sensitivity (91.0%) and specificity (89.6%) on 5,000 images (see K213353). Testing should include stratified analysis by subcategories (lesion type, size, etc.) to ensure consistent performance across the intended population. Ground truth (“truthing”) must be well-defined (e.g. expert-annotated), and test data must be sequestered from training data.

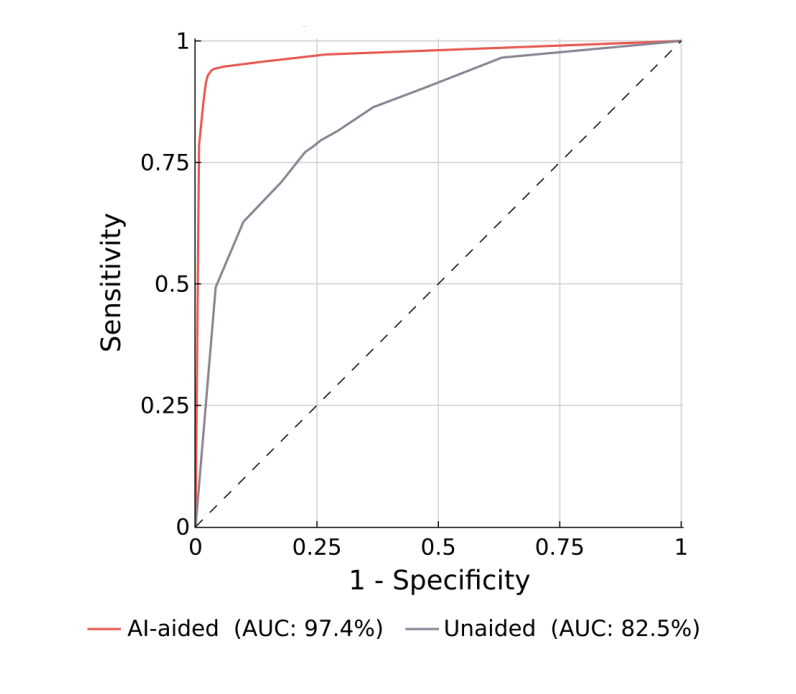

- Clinical Performance (Reader Study): FDA usually expects a clinical reader study (multiple readers on a set of cases, with and without the AI aid) to demonstrate the device’s impact on diagnostic performance. Because these CADe marks influence physician interpretation, sponsors must show that using the software improves clinical accuracy. For instance, Aorta-CAD underwent a fully-crossed multi-reader, multi-case (MRMC) study with 244 cases; radiologists’ AUCs for detecting aortic abnormalities improved significantly with AI assistance (p<0.001). Such studies compare “aided” vs. “unaided” readings to quantify the benefit. FDA’s 2020 CADe guidance emphasizes that standalone algorithm metrics alone may not be sufficient for CADe devices – a reader study is warranted especially if the new device or its predicate has different output or indications. Results from performance testing should demonstrate that the device improves reader performance in the intended use population.

Why These Tests? 🔗

Because Medical Image Analyzer CADe devices influence diagnostic decision-making, FDA mandates evidence that they are accurate and clinically effective. High standalone sensitivity is needed so true abnormalities aren’t missed, and reader studies must show that clinicians benefit from the tool. In short, the combination of algorithm accuracy and improved reader outcomes should demonstrate the device’s “is as safe and as effective” substantial equivalence. These tests address risks like missed findings or over-reliance on AI by proving the device’s reliability and its positive impact on radiologist performance.

Triage/Notification Software (21 CFR 892.2080, Product Codes QAS & QFM) 🔗

Device Types 🔗

These are radiological computer-aided triage (CADt) systems that prioritize urgent cases for review. They analyze images for time-sensitive findings and alert or reprioritize cases in a worklist. Importantly, they do not display diagnostic marks or findings – they only send a notification or change queue order. Product code QAS is defined as “radiological computer-assisted triage and notification software”. (QFM is a closely related code under the same regulation, specific to “prioritization software for lesions,” used similarly for triaging suspicious lesions on imagingaccessdata.fda.govaccessdata.fda.gov.) Examples: stroke detection alerts (flagging CT scans with a suspected large-vessel occlusion), pneumothorax triage on chest X-rays, or vertebral fracture triage. The standard of care reading process remains in place – these tools only accelerate the review of certain casesfederalregister.gov.

Testing Requirements 🔗

- Standalone Detection Performance: Even though the output is just a flag, the underlying algorithm typically must detect an abnormality to trigger the alert. Standalone test results for the detection algorithm are expectedfederalregister.gov. For example, if a triage tool flags “potential intracerebral hemorrhage,” the sensitivity and false-positive rate for hemorrhages on a representative dataset should be reported. High sensitivity is critical – missing a true critical case (false negative) is a key risk. False positives should be low enough not to overwhelm clinicians with unwarranted alerts.

- Triage Effectiveness (Clinical Benefit): Uniquely, these devices must demonstrate that they improve the timeliness of care, since that is their primary claim. FDA expects pre-specified performance measures related to triage efficacy – typically “time to review” or “time to notification” improvements. Testing protocols should simulate or analyze how much faster a case is read with the AI vs. without. For instance, a study might show that using the software moves critical cases higher in the worklist, resulting in an average review time of, say, 5 minutes instead of 30 minutes. This can be demonstrated via a reader study in a lab setting or via retrospective analysis of reading-time metrics.

- Software Verification & Validation (V&V): Comprehensive software V&V per FDA software guidance. Submit documentation of hazard analysis, requirements, design specs, traceability, and testing in accordance with FDA’s “Content of Premarket Submissions for Software” guidance. Testing should include ensuring the software does not remove cases from the worklist or otherwise interfere beyond notification (as stated in the classification, it must run in parallel to standard care). The notification mechanism should be tested for reliability (no crashes, timely alert generation). Usability testing is important here: the alert should be presented in a manner that the radiologist notices and understands. Human factors assessment may involve verifying that notifications are conspicuous and that users know how to respond.

Why These Tests? 🔗

Triage devices address the risk of delayed diagnosis in emergencies – their value is in speeding up care. Thus, FDA insists on evidence that using the software meaningfully accelerates case handling without compromising accuracy. The sensitivity and timing metrics directly relate to patient safety: a triage tool must reliably catch the urgent cases (minimize false negatives) and demonstrably get them seen sooner. At the same time, testing must ensure it doesn’t cause harm by, say, consistently false-alerting (which could distract clinicians or erode trust). By requiring performance characterization in diverse conditions and detailed algorithm descriptions, FDA ensures the sponsor has thoroughly evaluated where the AI works or fails. In sum, these controls make sure the triage software truly improves workflow outcomes (e.g. faster stroke treatment) and that users are aware of its proper use and limitations. The special controls, combined with general controls, mitigate the risks (like missed alerts or user over-reliance) to provide reasonable assurance of safety and effectiveness.

Diagnosis Aids for Suspicious Cancer Lesions (21 CFR 892.2060, Product Code POK) 🔗

Device Types 🔗

This group includes CADx software that generates diagnostic assessments (e.g., malignancy scores) for user-selected regions of interest (ROIs) in imaging studies. These tools support clinical decision-making, typically by aiding characterization of lesions suspicious for cancer.

All are regulated under 21 CFR 892.2060 and assigned product code POK: “Radiological computer-assisted diagnostic software for lesions suspicious for cancer.” Devices with this code are classified as Class II with special controls.

Testing Requirements 🔗

- Standalone Performance: FDA expects a large, representative dataset (multi-site, varied demographics, acquisition protocols). Performance metrics typically include ROC AUC, sensitivity, and specificity at clinically relevant thresholds. Some devices also report calibration curves or score binning metrics. For example, Koios DS demonstrated AUC 0.945 and sensitivity of 0.976 on over 1,500 retrospective cases (breast + thyroid combined) (FDA Summary).

- Clinical Performance (Reader Study): Because these devices offer diagnostic guidance (not just image analysis), sponsors must conduct a multi-reader, multi-case (MRMC) study. The goal is to show that use of the AI improves clinical performance compared to unaided reading or a legally marketed predicate. Koios DS reported improved reader AUC and specificity for thyroid nodules with the CADx aid.

- Subgroup Robustness and Bias Analysis: Performance should be broken down across key subgroups—e.g., age, scanner type, lesion size, acquisition parameters. This ensures generalizability and helps detect bias. For example, Koios DS analyzed performance by ultrasound probe frequency and found consistent AUCs across categories.

It is important to note that CADx faces stricter testing requirements than CADe, particularly regarding ROC AUC metrics.

ROC basics:

A Receiver-Operating-Characteristic (ROC) curve is just a graph that shows how well a test can sort “disease” from “no disease” across every possible cut-off.

• The farther the curve hugs the top-left corner, the better the test is at catching true positives without flooding you with false alarms.

• The single summary number is the AUC (Area Under the Curve)—think of it as a school grade from 0.5 (“coin-flip”) to 1.0 (“perfect”). A “stronger ROC” simply means a higher AUC and a curve closer to that top-left sweet spot.

In short, a “strong ROC” means the diagnostic score is consistently good at separating benign from malignant, and CADx devices need to demonstrate that robustness because clinicians rely directly on that score to decide what happens next.

CADx goes further—it tells the doctor what the marked thing probably is (e.g., “high-risk nodule”). That advice can sway treatment decisions—biopsy, surgery, no follow-up—so FDA wants very clear proof that the risk score is truly accurate across all cut-offs and patient subgroups. A high AUC (a “strong ROC”) shows the algorithm keeps its balance of true positives and false positives over the whole score range, giving doctors confidence that the number they act on won’t mislead them.

CADe only needs to point out things that might matter; if it misses something, the radiologist might still catch it, and if it flags too much, the doctor can ignore extra marks. A solid sensitivity test and a reader study that shows “radiologists find more stuff” is usually enough.

Why CADx scrutiny is tougher than CADe:

Additionally, the ground truth needed for CADx devices can differ from CADe:

| Element | CADe (Detection) | CADx (Diagnosis) |

|---|---|---|

| What must be “true” | Is an abnormality visible here? | Is the lesion benign or malignant? |

| Acceptable truth sources | • Radiologist consensus • Image-based markers (bounding boxes, masks) |

• Pathology/cytology report • Surgical resection findings • Long-term imaging stability (≥1–2 yr) if no tissue • Occasionally combined with radiologist ROI for location |

| FDA rationale | Finding errors are mitigated by human review; image experts are sufficient | Diagnostic mis-labeling directly affects treatment; clinical outcome confirmation needed |

Why These Tests? 🔗

POK devices influence diagnostic decision-making for potentially serious diseases (e.g., cancer). FDA therefore requires proof of both analytical and clinical validity. Reader studies ensure the device integrates safely into medical workflow.

Detection/Diagnosis Aids for Suspected Pathology (21 CFR 892.2090, Product Codes QDQ & QBS) 🔗

Device Types 🔗

This group includes CADe/CADx software that identifies specific high-impact pathologies. Notably, QDQ refers to “radiological computer-assisted detection/diagnosis software for lesions suspicious for cancer.” These are AI tools for cancer detection on imaging (e.g. mammography or lung CT cancer screening aids)accessdata.fda.govaccessdata.fda.gov. They may mark or characterize lesions (often assisting in distinguishing suspicious vs benign). QBS, on the other hand, is a product code for AI that detects fractures on radiographs (computer-assisted detection/diagnosis for fractures). Both codes fall under 21 CFR 892.2090 (Class II with special controls). These devices directly pinpoint clinically significant abnormalities that, if missed, could harm patients (cancers, fractures), so their testing is rigorous.

Testing Requirements 🔗

- Standalone Performance: Like other CADe, a large standalone study is required to quantify algorithm accuracy in detecting the target condition. Sensitivity is usually the paramount metric, as missing a cancer or fracture is a primary risk to mitigate. Specificity or false-positive rates per image must also be characterized, since too many false alerts can burden clinicians. Free-response ROC (FROC) analysis is common: sponsors plot sensitivity vs. average false positives per case. For example, a breast cancer CAD might report lesion-based sensitivity at a given FP rate, and an R0/AUC for case-level detection. FDA expects confidence intervals and possibly non-inferiority comparisons to predicates on the same dataset if feasiblefda.govfda.gov. The device’s standalone performance should be at least as good as the predicate’s; if there is a difference (e.g. new AI marks different lesion types), that could necessitate more clinical evidencefda.gov. Importantly, the test set must be independent and representative (multi-center images, various tumor sizes, fracture types, etc.). Any “truthing” (reference standard determination) should involve qualified experts, and variability in ground truth should be addressed (e.g. multiple radiologists consensus, with any discordance analyzed).

- Clinical Performance Study (Reader Study): Given these devices actually suggest diagnoses (e.g. “this lesion is suspicious”), FDA almost always requires a clinical assessment of reader performance with vs. without the devicefda.govfda.gov. This typically means an MRMC reader study. For instance, in a fracture-detection AI trial, multiple radiologists would read a set of X-rays with and without the AI’s marked fractures, and metrics like reader sensitivity and specificity are compared. If the predicate device had a known reader study result (as many earlier CADx did), the new device’s aided performance should be non-inferior or superior. FDA’s CADe guidance underscores that because “the reader is an integral part of the diagnostic process,” purely algorithm-centric testing may be insufficientfda.gov. The goal of the reader study is to show the AI meaningfully aids clinical diagnosis – e.g. radiologists catch more cancers with the CAD, or at least their performance is equivalent to using the predicate CADfda.govaccessdata.fda.gov. In our examples, BoneView (QBS) performed MRMC studies demonstrating improved reader AUC with the aid. We expect cancer detection AIs (QDQ) to do the same (historically, mammography CAD required extensive reader trials).

Why These Tests? 🔗

These devices target serious conditions (cancer, fractures) where diagnostic errors have high consequences. Therefore, FDA imposes the most stringent validation here – essentially proving both “analytical” and “clinical” validity. The standalone bench tests establish the AI’s raw ability to find the pathologyaccessdata.fda.gov, while the reader studies establish that this translates into a clinical benefit. In essence, the AI must demonstrate it makes doctors better at diagnosing the condition. For example, a breast lesion CAD should show radiologists catch as many cancers with the software as they did with the predicate CAD – if the new AI marks different lesions, the study must show those marks still help overall performance. These requirements address risks like false security (if the AI misses a cancer and the radiologist might otherwise catch it, the reader study will reveal if radiologists tended to skip unmarked lesions – a sign of over-reliance). The comprehensive testing and special controls (covering algorithm transparency, performance on subgroups, and user training) ensure a reasonable assurance of safety/effectiveness for these high-impact AI devicesaccessdata.fda.govaccessdata.fda.gov. Ultimately, by satisfying both robust standalone accuracy and demonstrated clinical effectiveness, the sponsor shows the device “perform[s] as well as” existing solutions and improves clinical outcomes in its intended useaccessdata.fda.gov.

Additional Considerations 🔗

- Good Machine Learning Practice & Bias Assessment: FDA expects sponsors to use sound data practices – e.g. using an independent test set, avoiding overfitting, and assessing algorithm performance across subpopulations. Many recent clearances mention evaluating performance by demographic subsets or imaging subgroups (age ranges, scanner models) as part of the technical validation. This ties into FDA’s push for addressing algorithm bias and ensuring generalizability.

- IMDRF “Clinical Evaluation” Principles: The FDA-recognized IMDRF framework breaks validation into Analytical Validation (does the software correctly output what it’s supposed to?) and Clinical Validation (does that output achieve the intended clinical purpose?)fda.govfda.gov. In practice, the “standalone performance” testing corresponds to analytical validation, and the reader/clinical studies correspond to clinical validation of the SaMD. FDA’s adoption of the “Software as a Medical Device: Clinical Evaluation” guidance reinforces this approachfda.govfda.gov. For our device categories: QIH devices may lean heavily on analytical validation (since their output is a measurement), whereas CADe/CADx devices require both analytical and clinical validation.

- Regulatory Terminology: Each of these product codes was created via the De Novo pathway with special controls. Special controls are essentially requirements like those discussed (performance testing, labeling disclosures, etc.) that manufacturers must meet for any device of that type. For example, the triage software special controls explicitly list the testing and labeling elements needed. This acts as a checklist for both industry and FDA reviewers to ensure all risk mitigations (from algorithm accuracy to user training) are addressed.

- Communication of Results (Labeling): A crucial part of testing is how results are presented to users and documented in labeling. FDA wants performance results communicated clearly so end-users understand the device’s limitations. Labeling must include a summary of validation results (e.g. “sensitivity X% on dataset of Y cases”) and intended use condition. This manages user expectations and aligns with FDA’s push for transparency in AI/ML devices.

- Human Factors & Training: No formal human factors or usability testing was explicitly stated in any of the 26 510(k) summaries. Critical errors due to usability issues are unlikely, and usability is typically addressed as part of reader studies.

- Cybersecurity Testing: Cybersecurity is a core safety feature. As software devices, sponsors must provide a threat model, software bill of materials (SBOM), and security test results, along with other cybersecurity documentation, in every 510(k) submission to demonstrate that the AI will remain safe and effective in today's challenging cybersecurity environment.

Sources 🔗

- FDA Guidance “Computer-Assisted Detection Devices Applied to Radiology Images” (2020)

- FDA Guidance “Technical Performance Assessment of Quantitative Imaging” (2022)

- FDA-recognized IMDRF Guidance “SaMD: Clinical Evaluation”

- FDA’s Federal Register notice on Radiological Triage Software Classification

- FDA Product Code Classification database

- Various 510(k) Summaries (FDA Accessdata) for listed devices