Executive Summary

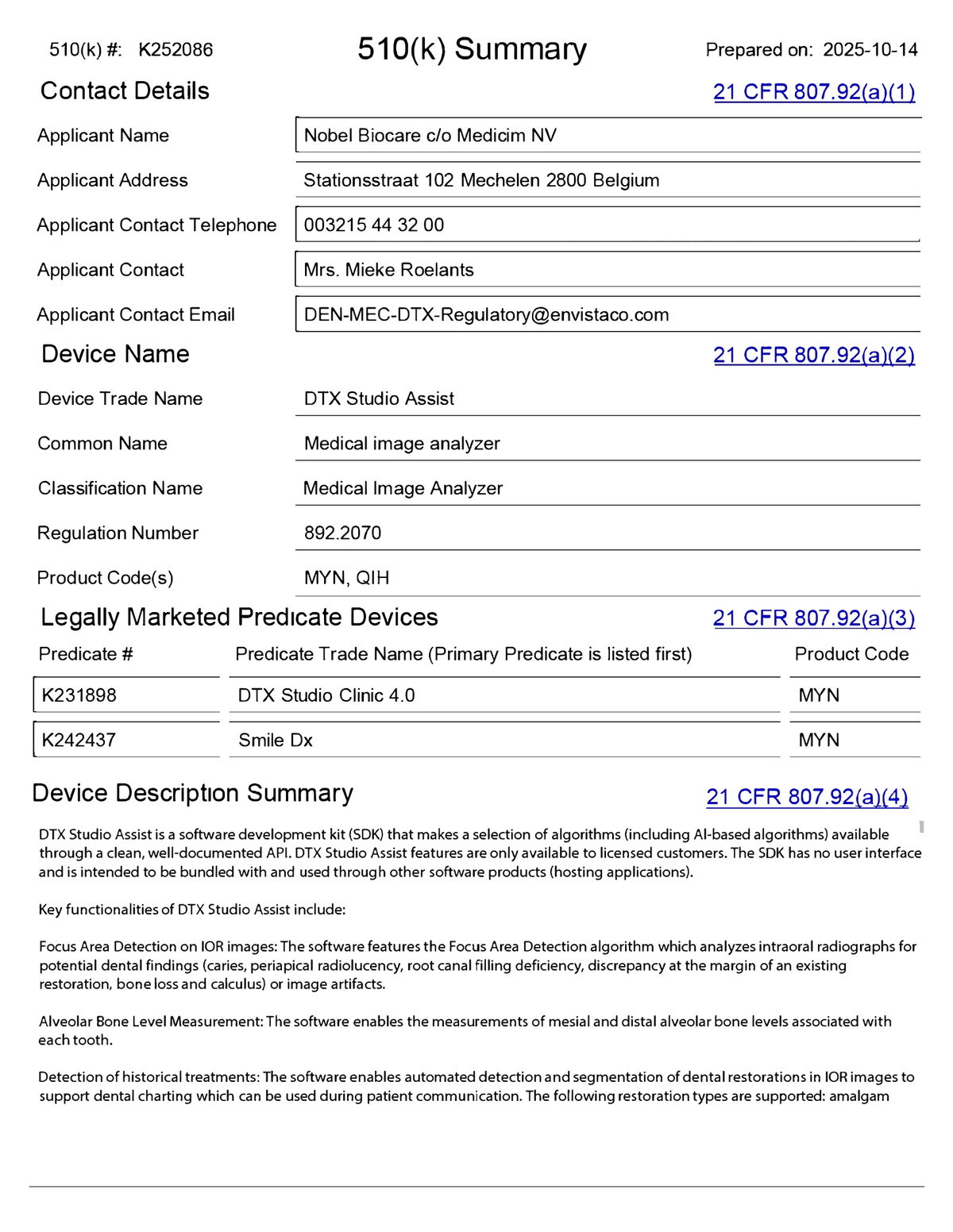

This submission for Nobel Biocare’s DTX Studio Assist (K252086) represents a strategically astute approach to clearing a dental AI/ML platform. Rather than marketing a standalone application, the device is a Software Development Kit (SDK) intended for integration into third-party dental software. This “headless” model, which provides structured data output via an API without a native user interface, significantly reduces the regulatory burden associated with user-facing components and human factors.
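
To make the "headless" idea concrete, here is a minimal Python sketch of what structured output from such a component could look like. Every name in it (`Finding`, `AnalysisResult`, the field names, and the sample values) is a hypothetical illustration. It does not reflect the actual DTX Studio Assist API, which the summary does not describe.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple


@dataclass
class Finding:
    """One detected item. All field names are hypothetical."""
    kind: str                        # e.g. "focus_area" or "restoration"
    bbox: Tuple[int, int, int, int]  # x, y, width, height in pixels
    score: float                     # model confidence between 0 and 1


@dataclass
class AnalysisResult:
    """Structured result for one radiograph; no rendering, no UI."""
    image_id: str
    findings: List[Finding] = field(default_factory=list)


def to_json(result: AnalysisResult) -> str:
    # The integrating application decides how (and whether) to display this.
    return json.dumps(asdict(result), sort_keys=True)


# Made-up sample data, for illustration only.
example = AnalysisResult(
    image_id="img-001",
    findings=[Finding("restoration", (120, 80, 60, 40), 0.93)],
)
payload = to_json(example)
print(payload)
```

The point of the design is that the component stops at data. Presentation, workflow, and the associated human factors work belong to whichever application consumes the payload.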

The submission employs a dual-predicate strategy, leveraging DTX Studio Clinic 4.0 (K231898) for its core CADe functionality and Smile Dx (K242437) for its more advanced segmentation and measurement features. This allows the sponsor to claim substantial equivalence by demonstrating that the subject device merely combines existing, cleared functionalities. A key aspect of this submission is the reuse of clinical data from a previous clearance (K221921) for the Focus Area Detection (CADe) algorithm, highlighting an efficient lifecycle management approach. The standalone performance testing for the newer algorithms (Restoration Detection, Alveolar Bone Level Measurement, and Anatomy Segmentation) is robust, with large, diverse datasets and clear, clinically relevant metrics. This is a well-executed, low-risk submission that provides a strong template for clearing AI/ML platforms as enabling technologies rather than final products.

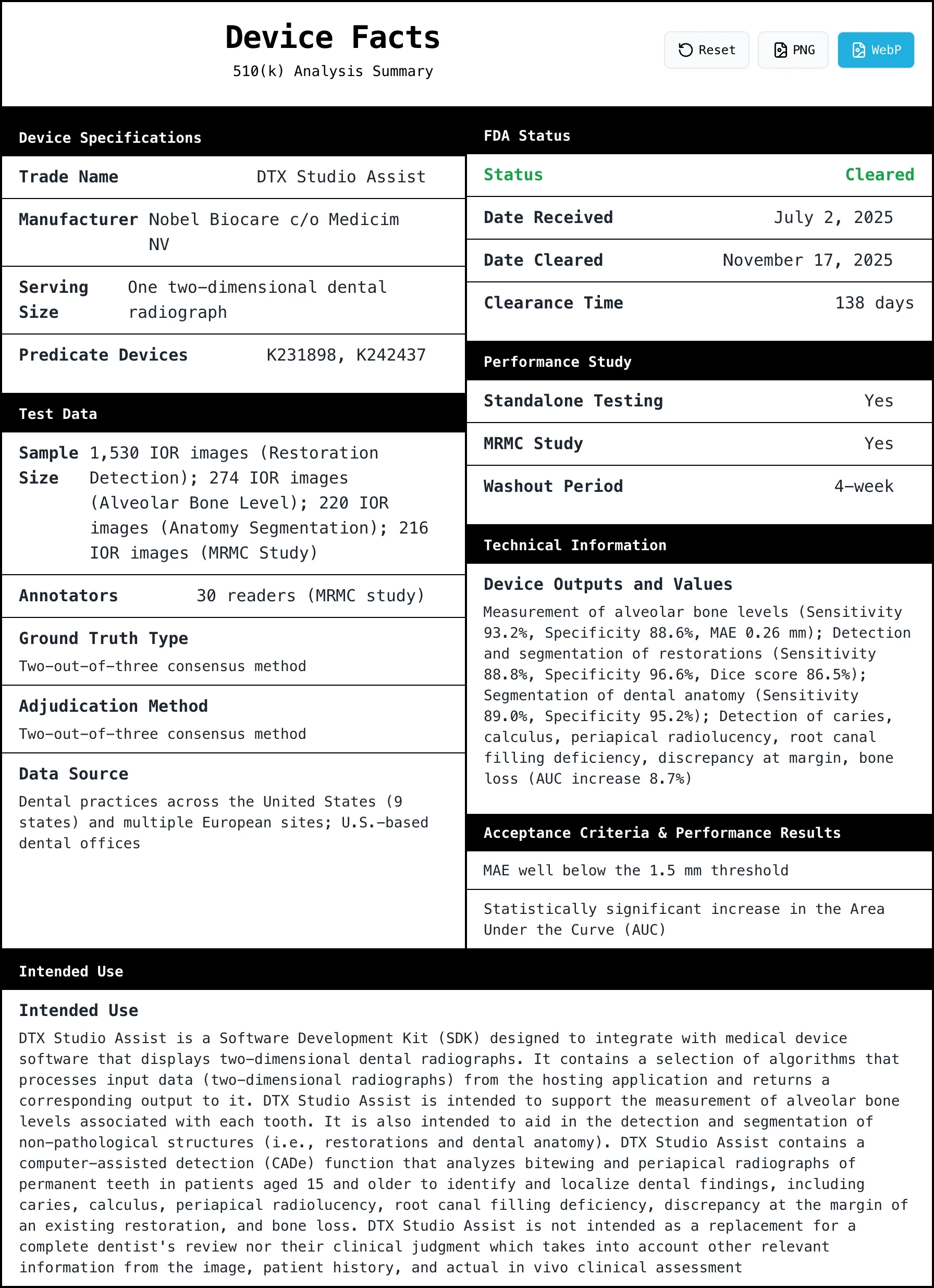

Performance Data Summary

The table below lists each device output and summarizes how it was validated.

| Device Output Name | Acceptance Criteria | Observed Values | Test Set Description | Training Set Description |
| --- | --- | --- | --- | --- |
| Focus Area Detection (CADe) | Statistically significant increase in AUC | AUC increased by 8.7% (p < 0.001) | N=216 images (periapical & bitewing) from U.S. dental offices. 30 dentist readers. | Not specified in summary (reused from K221921). |
| Restoration Detection | Not specified in summary. | Sensitivity: 88.8%, Specificity: 96.6%, Mean Dice: 86.5% | N=1,530 images (41% bitewing, 59% periapical) from US & Europe. Diverse demographics and scanner types. | Not specified in summary. |
| Alveolar Bone Level (ABL) Measurement | MAE < 1.5 mm | Sensitivity: 93.2%, Specificity: 88.6%, Mean Absolute Error (MAE): 0.26 mm | N=274 images (45% bitewing, 55% periapical) from 30 practices in US & Europe. | Not specified in summary. |
| Anatomy Segmentation | Not specified in summary. | Sensitivity: 89.0%, Specificity: 95.2%, Mean Dice: 86.5% | N=220 images (51% bitewing, 49% periapical) from US & Europe. | Not specified in summary. |
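
For readers less familiar with the metrics in the table, the following Python sketch shows the standard textbook definitions of sensitivity, specificity, the Dice coefficient, and mean absolute error. It is a minimal illustration with made-up numbers. The summary does not say at what level the sponsor counted hits (per image, per tooth, or per pixel), so the function names and inputs here are assumptions.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of true positives among all actual positives."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of true negatives among all actual negatives."""
    return tn / (tn + fp)


def dice_coefficient(pred_mask, true_mask) -> float:
    """Dice overlap for two flat binary masks (sequences of 0/1)."""
    intersection = sum(p and t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2 * intersection / total


def mean_absolute_error(predicted, reference) -> float:
    """Average absolute difference between paired measurements."""
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def meets_mae_criterion(predicted, reference, limit_mm: float = 1.5) -> bool:
    """Check the 'MAE < 1.5 mm' style of acceptance criterion."""
    return mean_absolute_error(predicted, reference) < limit_mm


# Made-up bone-level measurements in millimetres, for illustration only.
predicted_mm = [2.1, 3.0, 1.4]
reference_mm = [2.0, 3.5, 1.2]
print(mean_absolute_error(predicted_mm, reference_mm))
print(meets_mae_criterion(predicted_mm, reference_mm))
```

Writing the acceptance criterion as an explicit check, as in `meets_mae_criterion`, mirrors how a pre-specified threshold is compared against the observed value in the table.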

Page 4: Crafting the Indications for Use

The Indications for Use (IFU) is arguably the most important section of a 510(k), as it defines the device's legal marketing claims and regulatory boundaries. The sponsor makes several strategic choices here to establish a narrow, defensible scope.

Defining the device as an SDK is a key move. This frames the product as a component for other manufacturers, not a tool for clinicians directly, likely simplifying the regulatory path by offloading human factors and UI concerns to the integrating software. The IFU also clearly states the device is an adjunct ("second reader") and not a replacement for professional judgment. This is a classic and essential de-risking strategy for any CADe/CADx device, as it keeps the clinician firmly in the loop. Finally, limiting the indication to permanent teeth in patients aged 15 and older avoids the complexities and higher data requirements associated with pediatric dentistry.

A tightly crafted IFU that minimizes risk, clearly defines the user and patient populations, and specifies the device’s exact role in the clinical workflow is the foundation of a successful submission. By explicitly stating what the device is not (a replacement for a dentist), the sponsor proactively manages liability and sets clear expectations for the FDA.

For more on how the IFU fits into the broader submission, see Anatomy of an AI/ML SaMD 510(k) with Examples.

Page 5: The Dual-Predicate Strategy

The choice of two predicate devices is a notable and effective strategy.

The primary predicate, DTX Studio Clinic 4.0 (K231898), is the sponsor's own previous product, which contains the core CADe functionality. This makes the argument for substantial equivalence for that part of the algorithm straightforward. The secondary predicate, Smile Dx (K242437), is used to cover the additional functionalities like segmentation and measurement. This "predicate stacking" allows the sponsor to introduce a more feature-rich device by arguing that it is merely a combination of functionalities that have already been cleared by the FDA. The emphasis on the device being an API-driven SDK with no user interface is a recurring theme, reinforcing the idea that this is a component, not a complete medical device in the traditional sense.

Teaching Moment: When expanding the features of a cleared device, using a dual-predicate strategy can be very effective. It allows you to anchor your submission to a primary predicate that is very similar (often your own previous version) while using a secondary predicate to justify the addition of new, specific features. This piecemeal approach can be less burdensome than trying to find a single predicate that matches all aspects of your new device.

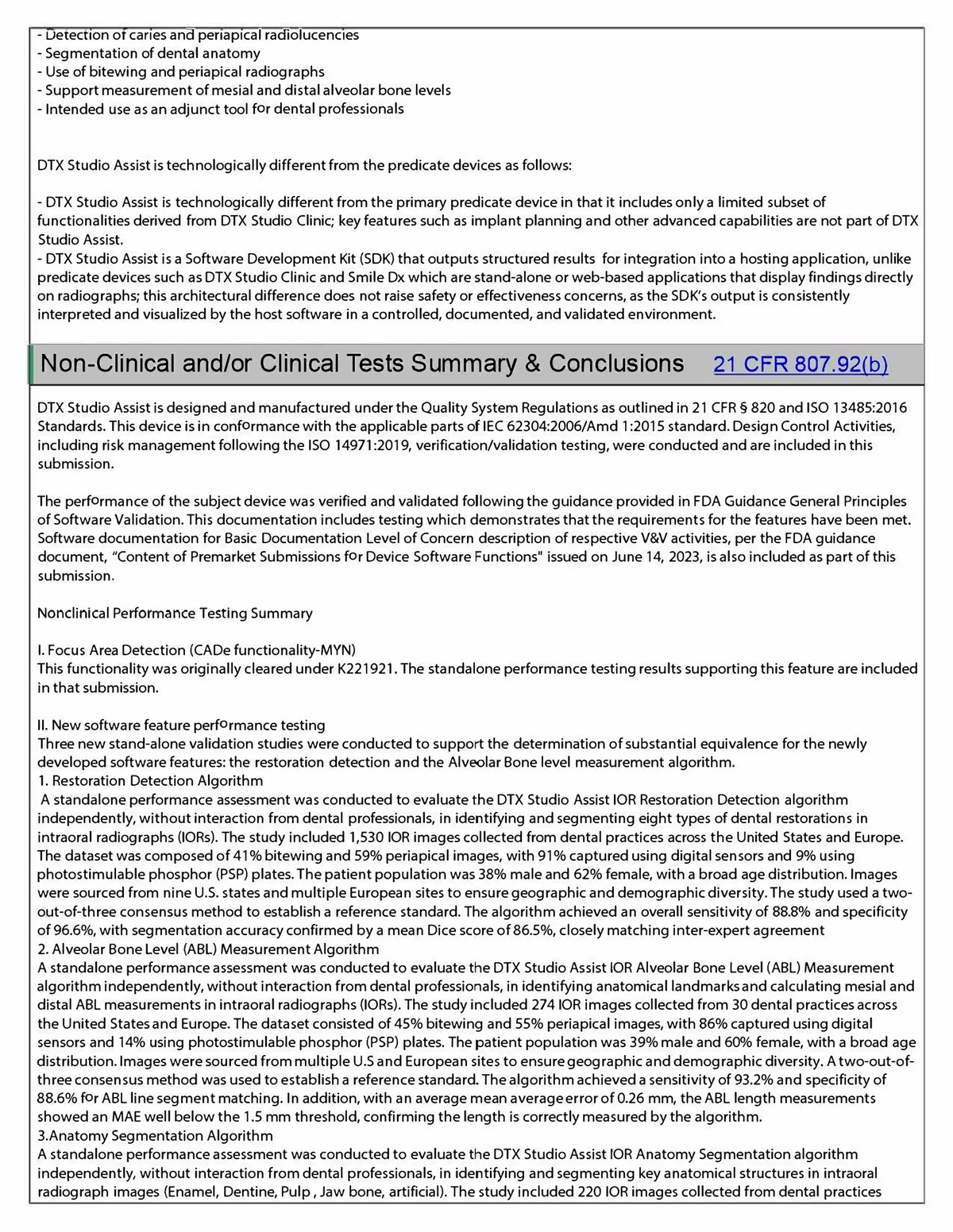

Page 7: Leveraging Existing Data in Performance Testing

This section clearly separates the validation strategy for the old and new algorithms, demonstrating an efficient product lifecycle approach.

Strategic Text:

For the established CADe functionality, the sponsor simply references the data from a prior 510(k) (K221921). This is an efficient and perfectly acceptable approach. For the new features, they conduct new standalone testing. The sponsor highlights the diversity of the datasets used for this new testing, explicitly mentioning collection from the United States and Europe and multiple scanner types. This is done to demonstrate the generalizability and robustness of the algorithms, heading off potential FDA questions about dataset bias.

Teaching Moment: Don't reinvent the wheel. If you have already submitted performance data for a specific algorithm component in a previous 510(k), you can and should leverage that data. For new components, the key is to demonstrate performance on a dataset that is representative of the intended patient population and clinical environments.
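
One common way to back up a claim of dataset representativeness is to report performance per subgroup as well as overall. The Python sketch below computes sensitivity by subgroup. It is a minimal illustration: the record fields (`image_type`, `region`, `truth`, `predicted`) and the sample cases are hypothetical and are not taken from the submission.

```python
from collections import defaultdict


def sensitivity_by_subgroup(cases, key):
    """Return {subgroup: sensitivity} using only ground-truth positive cases."""
    counts = defaultdict(lambda: [0, 0])  # subgroup -> [true positives, false negatives]
    for case in cases:
        if case["truth"]:
            counts[case[key]][0 if case["predicted"] else 1] += 1
    return {group: tp / (tp + fn) for group, (tp, fn) in counts.items()}


# Made-up cases, for illustration only.
cases = [
    {"image_type": "bitewing", "region": "US", "truth": True, "predicted": True},
    {"image_type": "bitewing", "region": "Europe", "truth": True, "predicted": False},
    {"image_type": "periapical", "region": "US", "truth": True, "predicted": True},
    {"image_type": "periapical", "region": "Europe", "truth": True, "predicted": True},
    {"image_type": "periapical", "region": "US", "truth": False, "predicted": False},
]

print(sensitivity_by_subgroup(cases, "image_type"))
print(sensitivity_by_subgroup(cases, "region"))
```

A large gap between subgroups, for example between image types or scanner types, is the kind of signal that would prompt questions about dataset bias.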

Deep Dive: The quality of the ground truth is paramount in these studies. For a deeper look into this topic, see the Definitive Guide to AI/ML SaMD Ground Truthing.

Page 8: Proving Clinical Benefit with an MRMC Study

This section summarizes the results of the pivotal multi-reader, multi-case (MRMC) study for the CADe functionality, which was reused from K221921.

Strategic Text:

An MRMC study is the gold standard for demonstrating the clinical benefit of a diagnostic or detection aid. The primary endpoint, a statistically significant increase in AUC, is a standard and well-accepted metric for this type of device. The sponsor is clear about the statistical method used (AFROC), which is appropriate for localization tasks. The reported result, an 8.7% increase in AUC with p < 0.001, is a strong finding that clearly demonstrates the device's benefit to clinicians.

Teaching Moment: For a CADe or CADx device that is intended to assist a clinician, a standalone performance test is often not enough. The FDA wants to see that the device actually helps a human user perform better. An MRMC study, which compares clinician performance with and without the AI tool, is the most robust way to provide this evidence.
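
The with-and-without comparison can be sketched in a few lines of Python. This is a deliberately simplified illustration: it uses plain empirical ROC AUC per reader rather than the AFROC figure of merit used in the study, it omits the reader-and-case variance modeling that a real MRMC analysis needs for its significance test, and all scores and labels are made up.

```python
def empirical_auc(scores, labels):
    """Empirical AUC: chance that a positive case outscores a negative one (ties count half)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def mean_auc_gain(unaided_by_reader, aided_by_reader, labels):
    """Average per-reader AUC difference (aided minus unaided) on the same cases."""
    gains = [
        empirical_auc(aided, labels) - empirical_auc(unaided, labels)
        for unaided, aided in zip(unaided_by_reader, aided_by_reader)
    ]
    return sum(gains) / len(gains)


# Made-up confidence ratings from two readers on four cases (1 = lesion present).
labels = [1, 1, 0, 0]
unaided = [[3, 2, 2, 1], [2, 1, 2, 1]]
aided = [[4, 3, 2, 1], [3, 2, 2, 1]]

print(mean_auc_gain(unaided, aided, labels))
```

Because every reader scores the same cases in both conditions, the per-reader differences are paired, which is what gives the MRMC design its statistical power.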

Deep Dive: For detailed guidance on setting appropriate performance goals, refer to the Definitive Guide to AI/ML SaMD Acceptance Criteria. For planning such a study, see How to Plan MRMC and Standalone Studies for a 3-Month 510(k).

Conclusion

The clearance of DTX Studio Assist (K252086) highlights the value of strategic device definition and predicate selection. By characterizing the product as a "headless" SDK and leveraging a dual-predicate strategy, the sponsor minimized regulatory burden while robustly validating the technology. This submission offers a replicable template for AI/ML platform developers: leave the user interface to the integrating software to reduce human factors testing, reuse existing clinical data where possible, and clearly separate the validation of new versus established algorithms.

If you want to predicate off K252086, or you have an AI/ML-based device and you want guaranteed FDA clearance and a submission in as little as three months,